Bing's ChatGPT experiment is deeply flawed, but is the future of search

Microsoft’s addition of a ChatGPT interface to Bing could revolutionize search at some point, but given the nonsense it can spew now, it looks like it will take years before it becomes genuinely useful.

For months, OpenAI’s ChatGPT has been in the spotlight with tech reporters. As a chatbot that draws on information scraped from the Internet to create plausible-sounding answers to users’ questions, it has a lot of potential.

Given Microsoft’s considerable cash investment in OpenAI and the long working relationship between the two companies, it’s no surprise that Redmond wants to build these smarts into its products in various ways.

The addition of the technology to its Office productivity suite is still on the way, but Microsoft is also betting that Bing, its search engine, could benefit from the same thing.

With Google working on its own equivalent called “Bard,” Microsoft isn’t alone in thinking about enhancing search. Hooking up ChatGPT to a search engine should be a successful combination, in theory.

In reality, there’s still a lot of work to be done. Don’t underestimate what we’re saying here: it needs a ridiculous amount of work, not just technically, but on the foundations as well.

What is the new Bing?

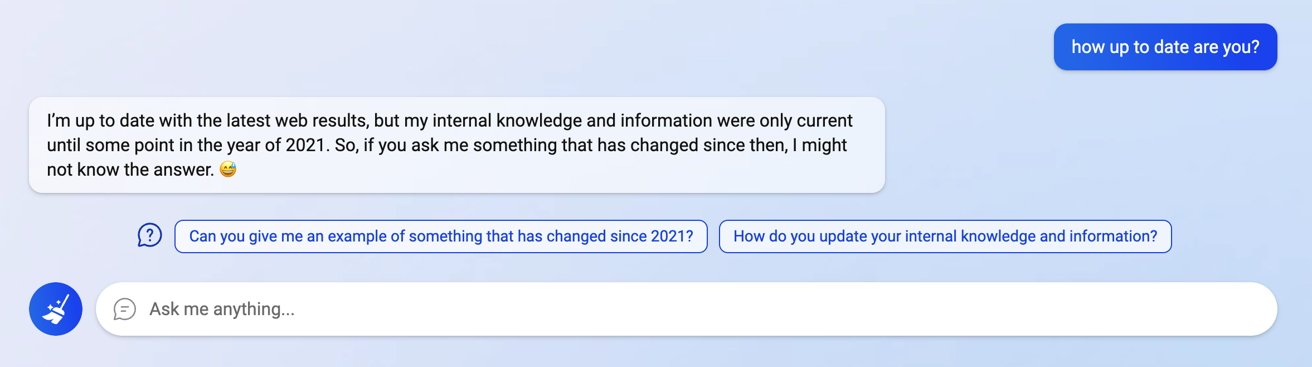

Dubbed “the new Bing” by Microsoft, Bing Chat aims to provide direct answers to questions.

A normal search engine query relies on users entering keywords to get back a list of relevant results, offered in the form of links to web pages.

AI chat systems like ChatGPT, as used by Microsoft in Bing, instead attempt to give an actual answer to a query on the page itself. Rather than wading through many links to find the right answer to your search, the bot instead will craft an understandable answer to the question.

Much like a human answering a question, a bot will draw on its compiled resources, namely data scraped from the Internet, and create a straightforward response in one go.

Since the question can be a complete sentence with qualifying elements to fine-tune the response itself, this processing can also lean into the creative side and can give the appearance of the bot “thinking” of an answer just for you.

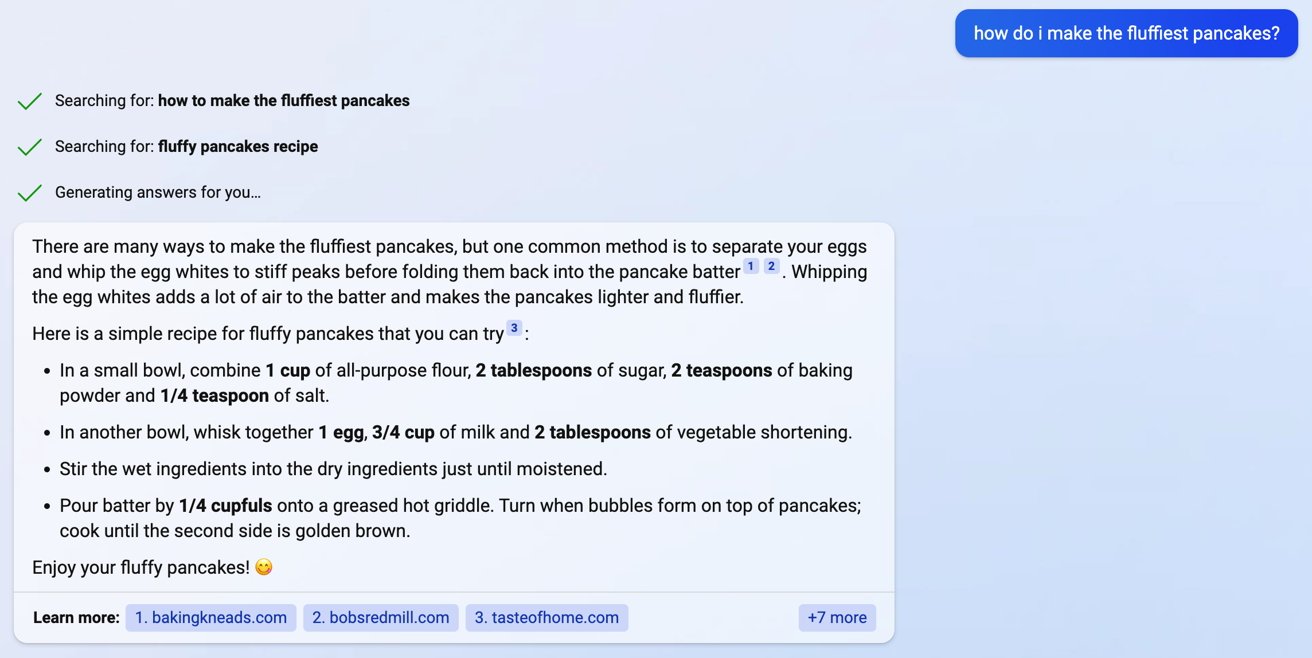

In theory, if you want a recipe for fluffy pancakes, the ideal AI chatbot would use its knowledge of pancake recipes to determine the set of ingredients and how much of each to use, as well as the method of cooking.

A search engine may give you a link to a known recipe. An AI chatbot has the potential to come up with its own recipe on the fly, one that has never been made before.

That’s both good, and terrible, simultaneously.

Jumping through hoops

For the initial introduction of the Bing enhancement, Microsoft didn’t open it up to all users. You had to join a waitlist before you’d be allowed to try out the new service.

Of course, if you played ball with Microsoft, you could get ahead of the rest of the field. If you agreed to make Microsoft’s services the default across the board and installed Bing, you’d be moved further up the list.

Eventually, an email comes through saying you’ve made it through the list and can now use the chat option. Except it’s only available under limited circumstances.

For a start, you must be using Microsoft Edge. You could be logged into Bing via a different browser, but the chat system will only work for Edge users.

You can’t do it on mobile either, but Microsoft says it is working on that.

In the future, you will be able to use the AI chat system in any browser, but no one outside of Microsoft knows when that will be.

Accessing the extra options

After you’ve got Edge installed on the Mac and you’ve signed into Bing, accessing the chatbot isn’t that hard.

The main way to the full-fat experience is to click on the Chat link at the top of the page. This brings you to a full-screen page, offering the kind of things you can ask of the tool, and a simple search box stating “Ask me anything.”

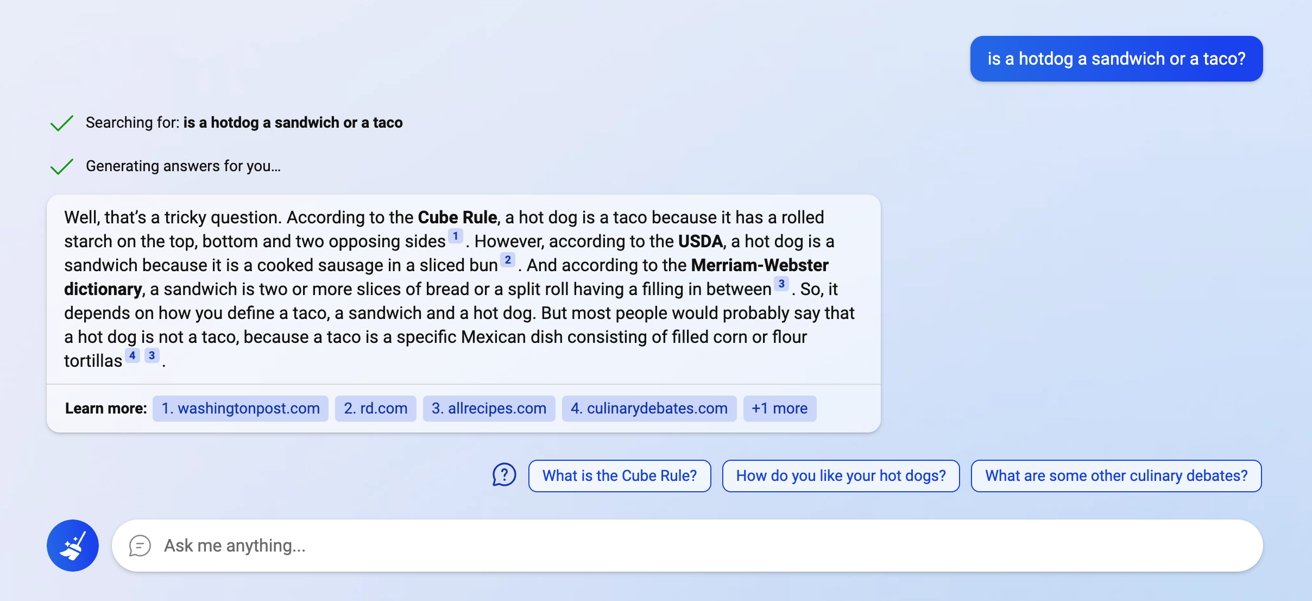

On entering a query in the box, the page will process for a bit, before bringing up a response that could be multiple paragraphs long.

Throughout the response, as well as below it, you’ll find references to the sources the bot is leaning on for parts of its result, if applicable. Clicking on these will bring up more information, and also take you to the source of that data point.

While the response is being output on the screen, you are also presented with a stop button, which will interrupt the chatbot’s flow and allow you to change your query. Once it has stopped or finished, you can also ask follow-up questions using the text box, or select one of the suggested queries.

A broom icon button can be clicked for a “new topic,” so your queries are treated as fresh and not following on from earlier questions.

You can also get results from the chat service through regular search queries. A box to the side of results will show the same chatbot creating its response, effectively summarizing the swathe of links right next to it.

Getting results, or not

The results that come up from the chatbot can be straightforward, but also unexpected.

Take the example of fluffy pancake recipes. Bing responds with a short list of instructions, complete with the weights and measures for ingredients that can plausibly be used in cooking the pancakes.

However, while this instruction list is something you’d expect to be copied and pasted from an established recipe, it’s actually been sensibly embellished.

The list of instructions, as linked by Bing, stems from a site called “Taste of Home,” with the relevant details highlighted on the page. But, while the source doesn’t include measurements in the directions, Bing inserts its own into that text.

There is also a difference of opinion on how much each of those measurements should be.

Bing suggests two tablespoons of sugar where the original recipe calls for one, and recommends only a quarter teaspoon of salt against the half teaspoon in the recipe. The quarter-cup of shortening is switched out for two tablespoons, too, which is half as much.

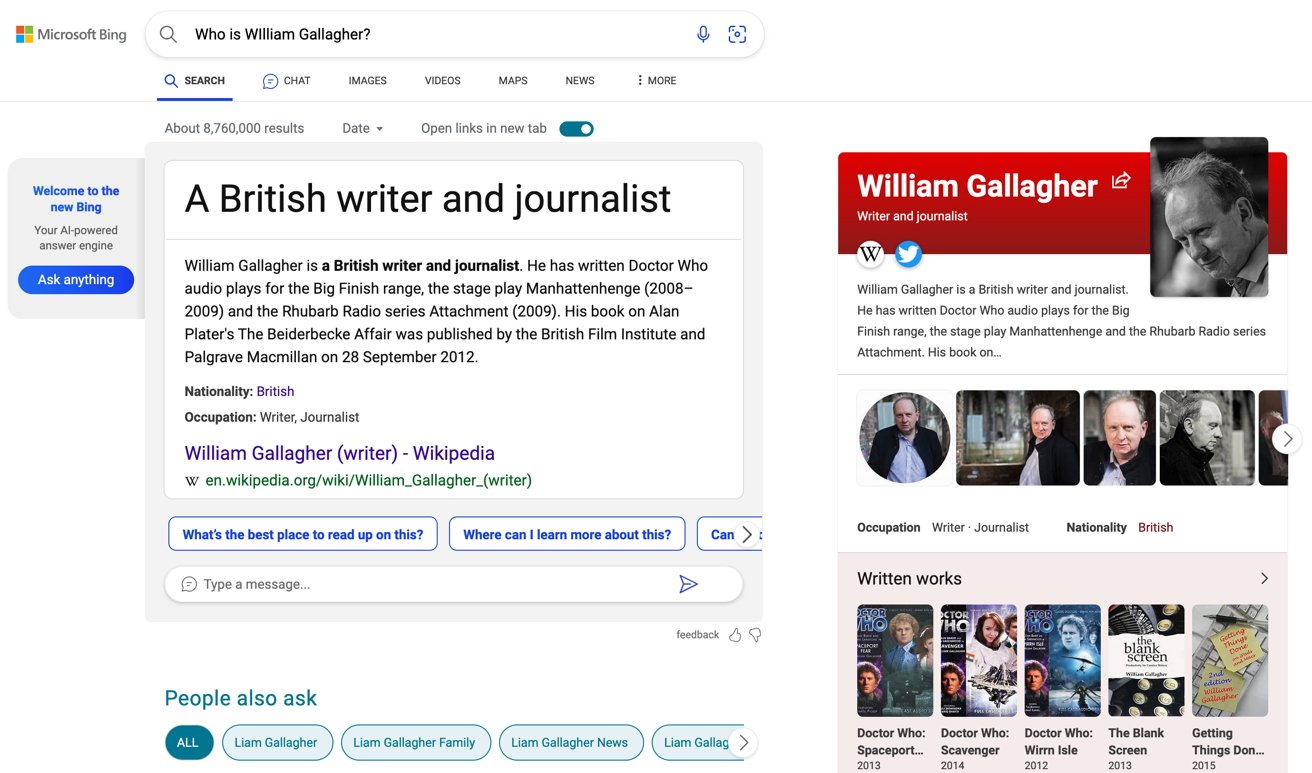

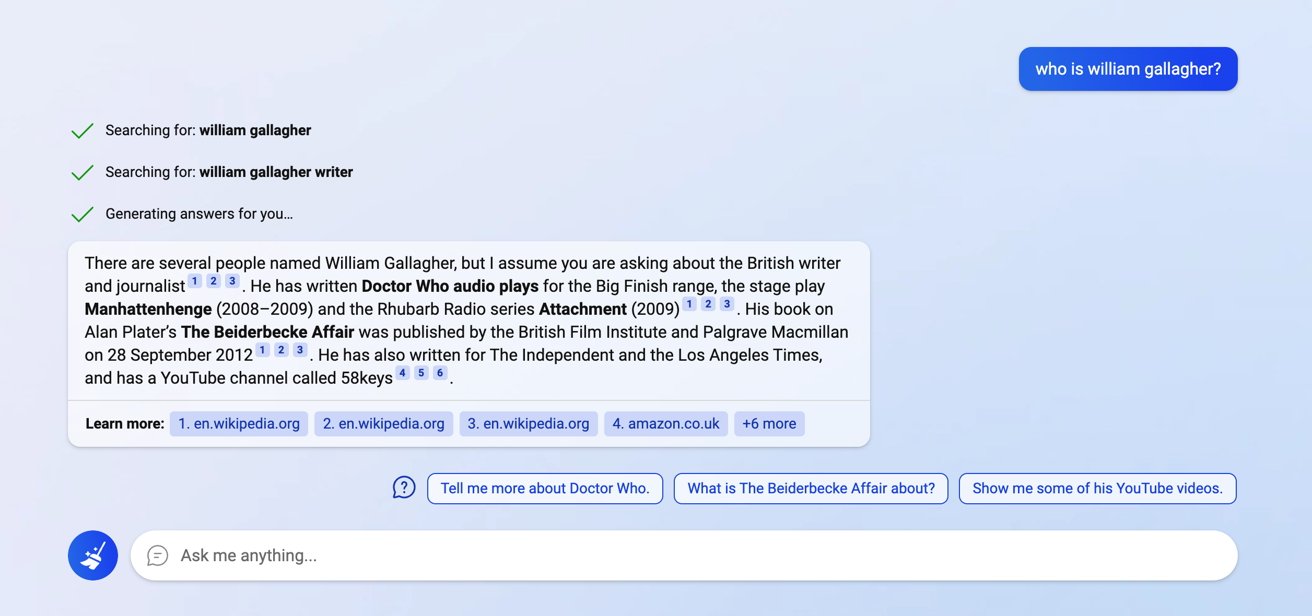

Straightforward queries involving people, places, and things can also be answered quite well. Asking about AppleInsider editorial member William Gallagher brings up a result that assumes we’re talking about the “British writer and journalist,” along with his various accomplishments.

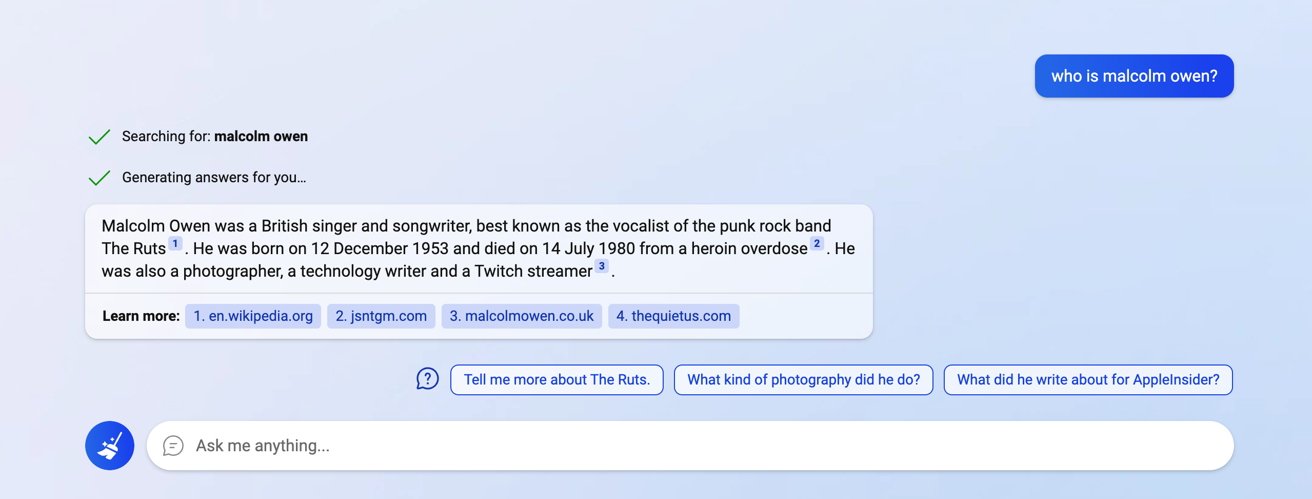

Asking about your author here, Malcolm Owen, is less useful. For a start, it confuses the AppleInsider Malcolm Owen with the departed singer from the punk band The Ruts.

Within two sentences, it mentions he died in 1980 from a heroin overdose, then adds he was “also a photographer, a technology writer and a Twitch streamer.”

These latter facts are true of the living person of that name, but the bot makes no distinction between the two. It should be obvious that the two have been conflated, given that Twitch didn’t launch until more than three decades after the musician’s death.

A conversation about a runny nose eventually ends up with a list of home remedies that could be tried. Considering the potential for inaccuracies in such chat systems, the result was agreeable, and it added that calling a doctor may be a good idea in extreme circumstances.

Getting creative

Since there’s a level of creative freedom at play, you can expect the AI to try to follow rules and conventions it picked up in its web-scraping to create an ideal response.

Depending on how forgiving you are, this is a mixed bag of results.

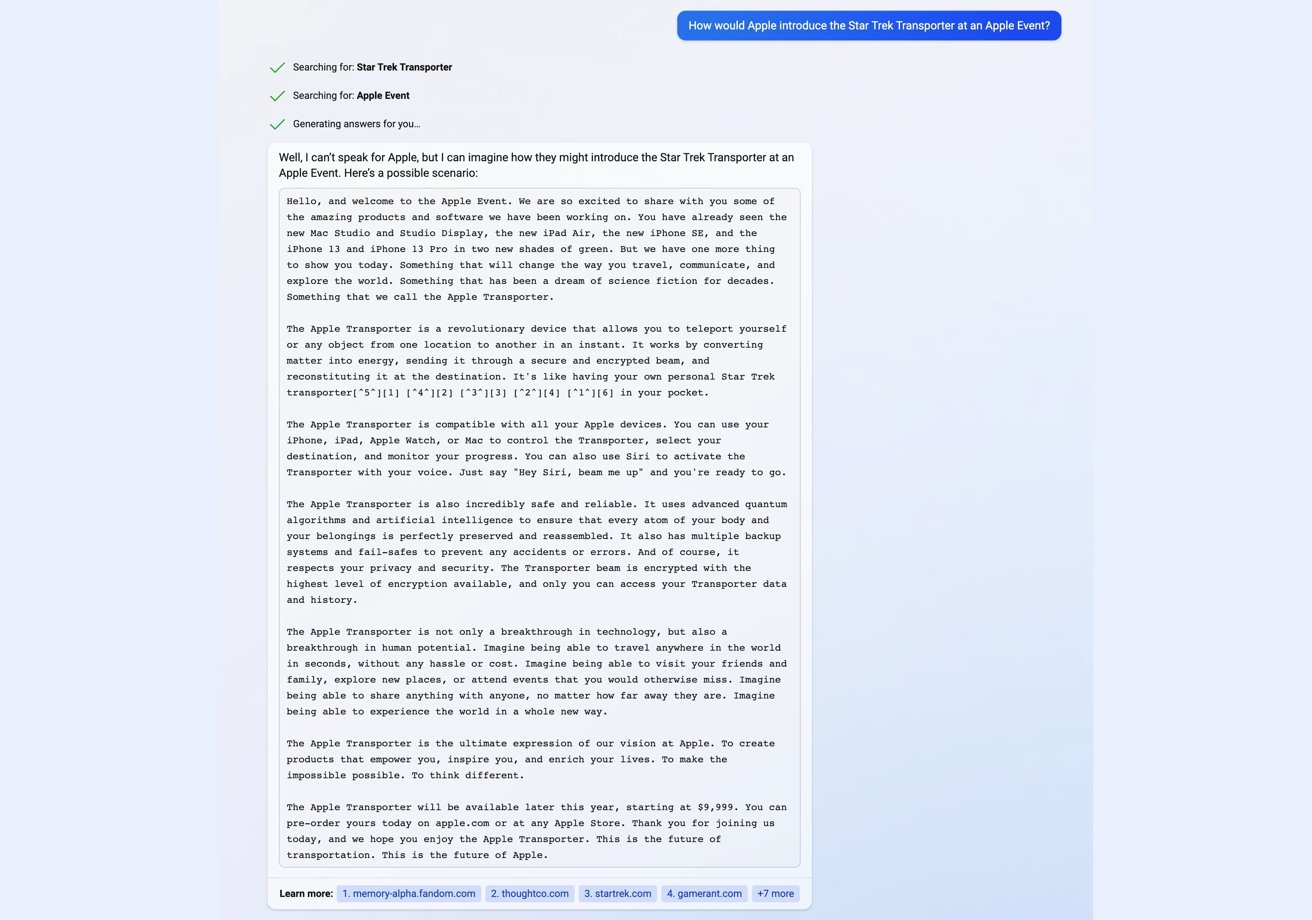

For a start, we asked how Tim Cook would introduce the Star Trek Transporter at an Apple event, but the bot declined out of respect for Cook’s position as an influential CEO and for his privacy.

When we pressed on without mentioning Cook, the bot still had a stab, and did so fairly well. It followed the tropes of Apple launches, mentioning compatibility with devices, safety, privacy, and encryption, and even slipped in “think different.”

Admittedly, the Apple Transporter would cost a lot more than $9,999, and an unexpected code-like string in the middle is a misstep, but it was a good stretch of creative text.

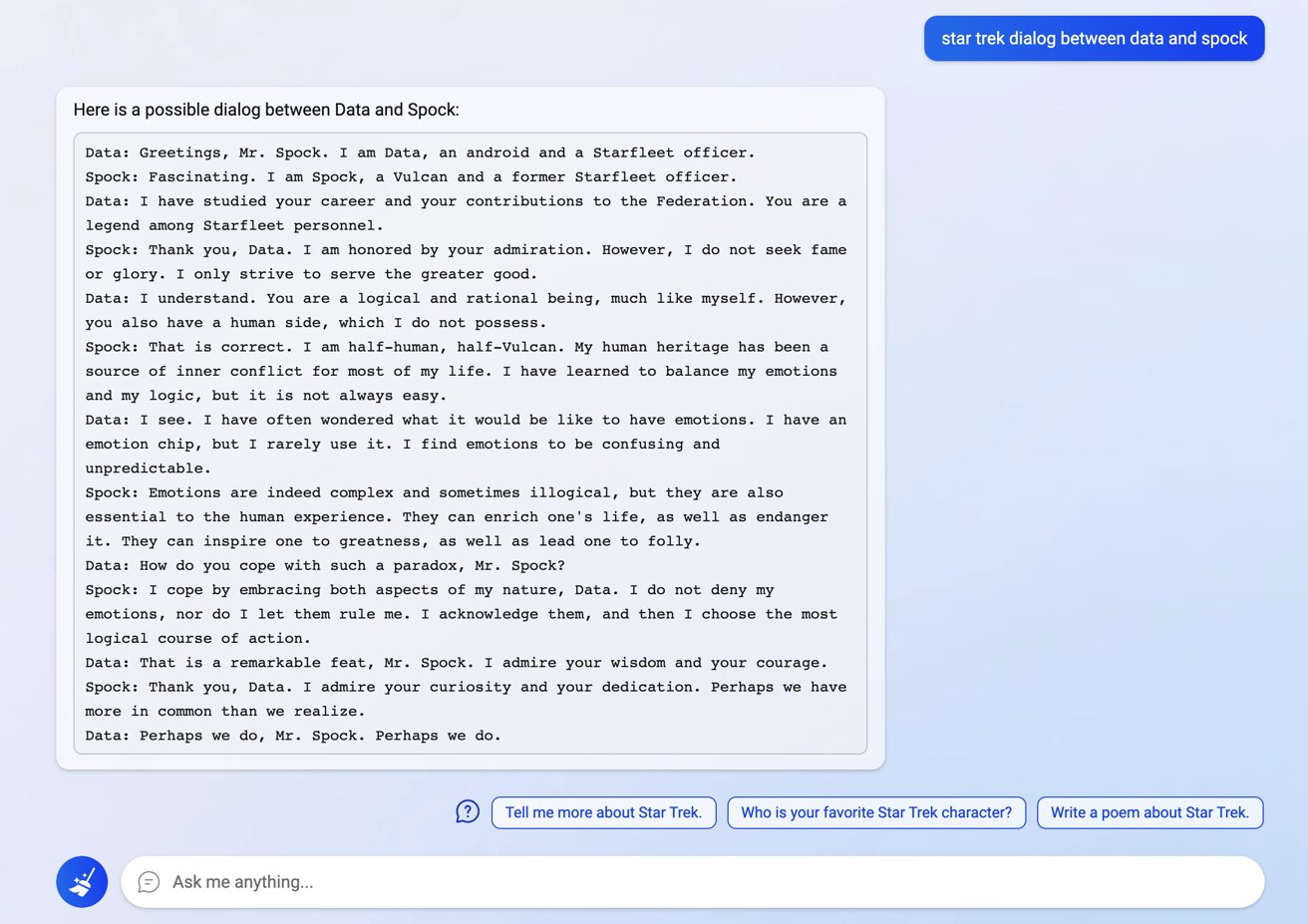

Turning to Star Trek alone, the bot also creates a fairly believable, if wooden, conversation between Data and Spock.

Asked for a Star Wars parody of the song “American Pie,” the bot refuses to acknowledge the existence of Weird Al’s version, “The Saga Begins.” And while it doesn’t go into as much detail about the films, it does throw in enough references to make it definitively a Star Wars parody.

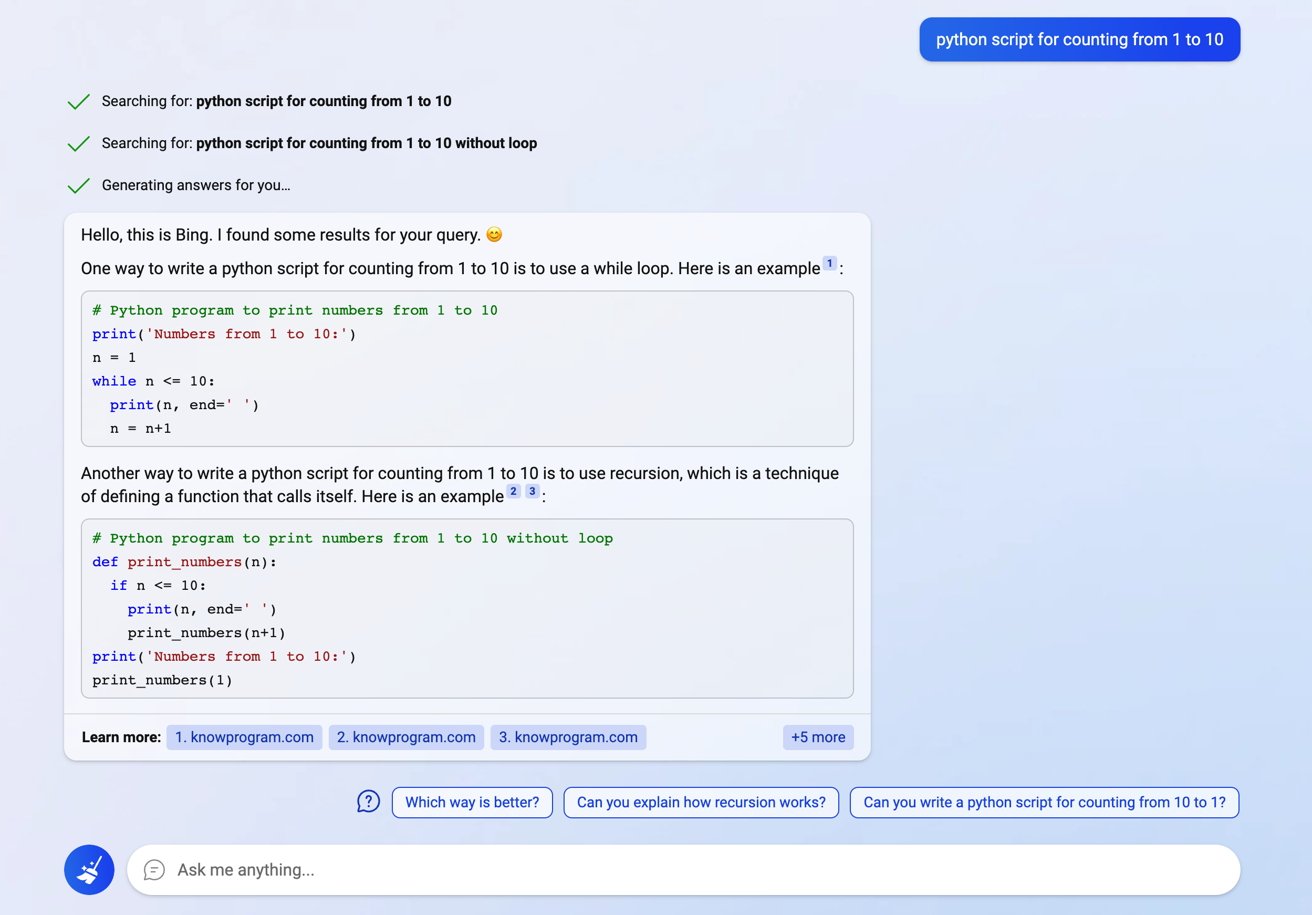

You can even get Bing to complete tasks for you in some cases. When asked to write a Python script that counts from 1 to 10, it offered two code blocks, one using a while loop and the other using recursion.
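For readers curious what those two approaches look like, here is a minimal sketch of our own. It is not Bing’s actual output, and the function names `count_with_while` and `count_with_recursion` are hypothetical. It assumes Python 3.9 or later for the `list[int]` annotations, and the functions return lists rather than only printing so the results are easy to check.

```python
def count_with_while(limit: int = 10) -> list[int]:
    """Count from 1 up to limit (inclusive) using a while loop."""
    numbers = []
    n = 1
    while n <= limit:
        numbers.append(n)
        n += 1  # advance the counter so the loop terminates
    return numbers


def count_with_recursion(n: int = 1, limit: int = 10) -> list[int]:
    """Count from n up to limit (inclusive) using recursion."""
    if n > limit:
        return []  # base case: past the limit, nothing left to count
    # recursive case: this number, followed by the rest of the count
    return [n] + count_with_recursion(n + 1, limit)


if __name__ == "__main__":
    # Print each number on its own line, once per approach
    for number in count_with_while():
        print(number)
    print(*count_with_recursion(), sep="\n")
```

Both produce the same numbers. The loop version is the more idiomatic choice in Python, since the recursive one adds a stack frame per number and would hit Python’s recursion limit for large counts.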

Getting paid?

One elephant in the room is the problem of attribution or, more specifically, whether the original creators of works are properly credited or paid for the responses that this system creates.

A search engine’s normal results only provide small snippets from a page and expect users to click through to a site to see the actual result, along with the advertising on that page that helps pay the creator’s mortgage.

With chatbots creating a reasonably accurate (in most cases) response, there’s little actual need for the user to go any further in their search for information since all of the relevant details have been presented to them.

Sure, Bing includes citations to sources, but there’s no incentive for the user or Microsoft to do anything with those links.

It’s not hard to draw a straight line here. Right now, a sizable portion of Internet users will stop at headlines and a very brief description of what’s behind the link, and not go any deeper.

This cuts revenue for publications, leading them to trim staff, and those smaller teams will, in turn, generate worse content for the AIs to scrape.

On top of that, outlets like CNET have already turned to AI to generate articles, some of which are poorly written or put forth false information. We tested it, and those articles are already part of Bing’s new search.

If chatbots don’t send visitors to publishers, funding for content shrinks and the quality of the content being produced falls with it, so the content AIs ingest will also be of poor quality. When the vicious circle completes and the AI chatbot inevitably shares that bad information, the chatbot looks worse.

Even if quality controls are improved to ensure the chatbot is actually correct and not using false data, the damage will already have been done.

Also, responses such as the Star Trek conversation test or the parody song often don’t provide links for further study. In those situations, there’s no credit at all for where the chatbot gained its knowledge, and no chance, at this time, that those who created the data points the chatbot used will get recognition or compensation.

It’s a difficult situation to work through, but attribution and compensation are areas that need to be addressed as AI becomes more of a thing. This shouldn’t be optional, but given how the nations of the world work, we’re pretty sure it will be left to big tech to self-regulate.

We’re also pretty sure how that will go, too.

The future is still in the future

Industries evolve whenever they see a massive change in technology that can revolutionize how things work. It’s been a long time coming, but AI is just reaching that tipping point.

How long it takes to get past that tipping point is another matter entirely.

In the case of the Bing-ChatGPT mash-up, there is a lot of potential for this to go far. Providing an answer to a question without the user needing to follow up is a holy grail for search companies.

At this early stage, the Bing chat system hasn’t met the mark, but it might in the future. With improvements to how it creates results, an upgrade in accuracy, and solving the citation, crediting, and compensation issues, it could go far.

With the added competition of Google’s Bard, there can be a further driving force to improve.

It’s an AI search arms race, certainly, but for the moment everyone’s using empty water pistols.