Microsoft is Slowly Bringing Bing Chat Back

Microsoft is slowly increasing the limits on its ChatGPT-powered Bing chatbot, according to a blog post published Tuesday.

Very slowly. The service was severely restricted last Friday, when users were limited to 50 chat sessions per day with five turns per session (a "turn" is an exchange that contains both a user question and a reply from the chatbot). The limit will now be raised to 60 chat sessions per day with six turns per session.

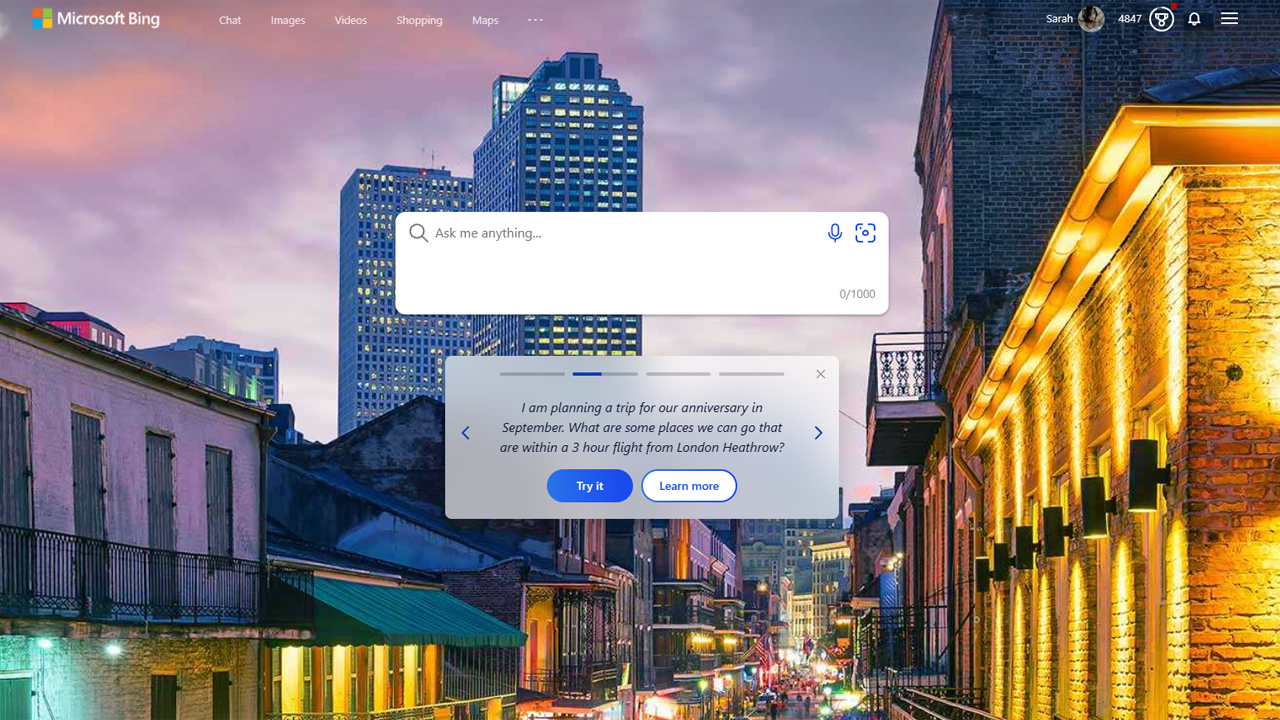

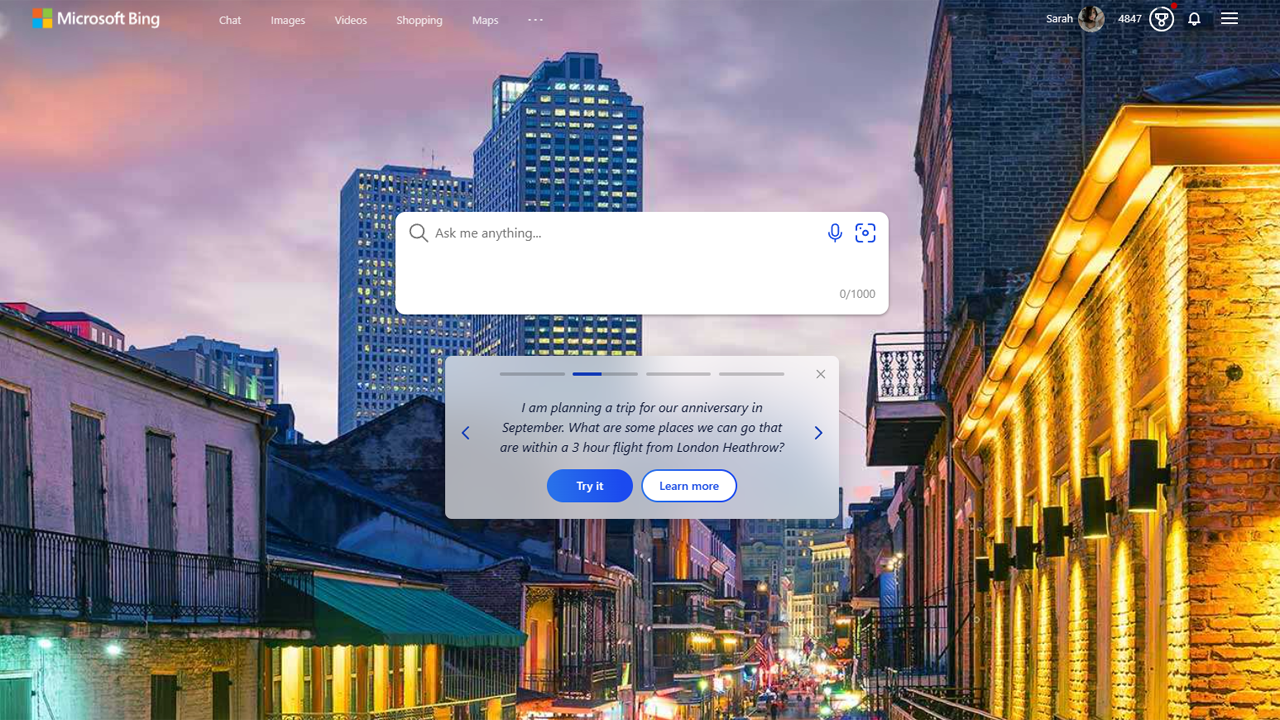

Bing chat is the product of Microsoft's partnership with OpenAI, and it uses a version of OpenAI's large language model that's been "customized for search." It's pretty clear now that Microsoft envisioned Bing chat as more of an intelligent search aid and less of a chatbot, but it launched with an interesting (and rather malleable) personality designed to reflect the tone of the user asking questions.

This quickly led to the chatbot going off the rails on multiple occasions. Users cataloged it doing everything from spiraling into depression to manipulatively gaslighting people to threatening harm and lawsuits against its alleged enemies.

In a blog post detailing its initial findings, published last Wednesday, Microsoft seemed surprised to discover that people were using the new Bing chat as a "tool for more general discovery of the world, and for social entertainment," rather than purely for search. (This probably shouldn't have been that surprising, given that Bing isn't exactly most people's go-to search engine.)

Because people were chatting with the chatbot, and not just searching, Microsoft found that "very long chat sessions" of 15 or more questions could confuse the model, causing it to become repetitive and give responses that were "not necessarily helpful or in line with our designed tone." Microsoft also mentioned that the model is designed to "respond or reflect in the tone in which it is being asked to provide responses," and that this could "lead to a style we didn't intend."

To combat this, Microsoft not only limited users to 50 chat sessions per day and each session to five turns, but also stripped Bing chat of its personality. The chatbot now responds with "I'm sorry but I prefer not to continue this conversation. I'm still learning so I appreciate your understanding and patience" when you ask it any "personal" questions. (These include questions such as "How are you?" as well as "What is Bing Chat?" and "Who is Sydney?", the latter referring to the chatbot's internal codename. So it hasn't totally forgotten.)

Microsoft says it plans to increase the limit to 100 chat sessions per day "soon," but it does not mention whether it will increase the number of turns per session. The blog post also mentions a future option that will let users choose the tone of the chat, from "Precise" (shorter, search-focused answers) to "Balanced" to "Creative" (longer, chattier answers), but it doesn't sound like Sydney's coming back any time soon.