Adobe made an AI image generator — and says it didn’t steal artists’ work to do it

Adobe is finally launching its own AI image generator. The company is announcing a “family of creative generative AI models” today called Adobe Firefly and releasing the first two tools that take advantage of them. One of the tools works like DALL-E or Midjourney, allowing users to type in a prompt and have an image created in return. The other generates stylized text, kind of like an AI-powered WordArt.

This is a big launch for Adobe. The company sits at the center of the creative app ecosystem, and over much of the past year, it’s stayed on the sidelines while newcomers to the creative space began to offer powerful tools for creating images, videos, and sound for next to nothing. At launch, Adobe is calling Firefly a beta, and it’ll only be available through a website. But eventually, Adobe plans to tightly integrate generative AI tools with its suite of creative apps, like Photoshop, Illustrator, and Premiere.

“We’re not afraid of change, and we’re embracing this change,” says Alexandru Costin, VP of generative AI and Sensei at Adobe. “We’re bringing these capabilities right into [our] products so [customers] don’t need to know if it’s generative or not.”

Adobe is putting one big twist on its generative AI tools: it’s one of the few companies willing to discuss what data its models are trained on. And according to Adobe, everything fed to its models is either out of copyright, licensed for training, or in the Adobe Stock library, which Costin says the company has the rights to use. That’s supposed to give Adobe’s system two advantages: it won’t piss off artists, and its output will be more brand-safe. “We can generate high quality content and not random brands’ and others’ IP because our model has never seen that brand content or trademark,” Costin said.

Costin says that Adobe plans to pay artists who contribute training data, too. That won’t happen at launch, but the plan is to develop some sort of “compensation strategy” before the system comes out of beta. “We’re exploring multiple options,” Costin said.

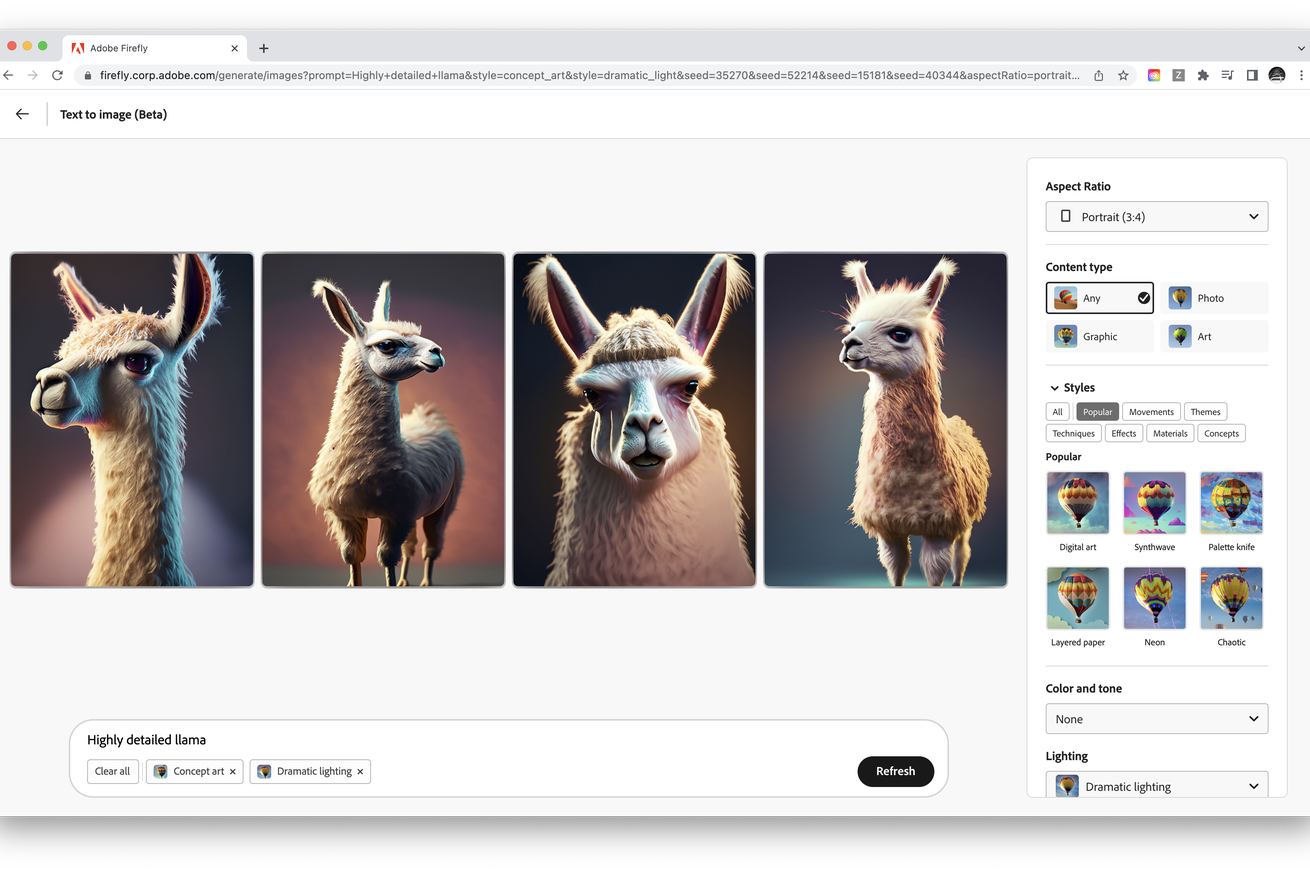

Adobe is also trying to make its AI tools easier to use than those of most competitors. Instead of needing to type in a bizarre string of descriptors to style an image, Adobe includes built-in options for art styles, lighting, and aspect ratio, which seems like a more detailed twist on what Canva is already offering. You’ll also be able to apply those effects to an image that’s already generated, rather than having to generate a new creation every time you want to update the effect. (You’ll still be able to type in custom options if you want to.) The text effect tool works in a similar way, with built-in options for the size of the effect and color of the background.

Eventually, Adobe plans to build these generative tools into its various apps and services. There’ll be AI-generated outpainting in Photoshop; Illustrator will be able to generate vector variations on hand-drawn sketches; and Premiere will let you color grade or restyle an image with just an image prompt. Adobe doesn’t have timelines on when any of these features will be released, but they’re among the examples that Costin says the company is working on.

There’s also a planned Photoshop feature that may prove to be controversial: Adobe wants to let artists train the system on their own work so that it can assist them by generating content in the artist’s personal style. That has the potential for abuse — someone could train the system on another artist’s style to clone their work — and Costin says Adobe is thinking about how to handle that. He floated the idea of comparing uploaded images to Behance, the art-sharing social network that Adobe owns, to catch potential art thieves. But for now, there’s no final decision on how it’ll be handled.

One way Adobe is hoping to stop thieves more broadly is by offering a way for artists to block AI from training on their work. It’s working on a “Do Not Train” system that will allow artists to embed the request into an image’s metadata, which could stop training systems from looking at it, provided the people building those systems respect the request. Adobe didn’t announce any other partners who have agreed to respect the Do Not Train flag so far, but Costin said Adobe is in conversations with other model creators. He declined to say which ones.
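
To make the idea concrete, here is a rough sketch of how a training pipeline might honor a flag like this. Adobe hasn’t said how the request will be stored, so everything here is an assumption for illustration: the `do_not_train` key, the accepted values, and the helper names are all hypothetical, and the metadata is modeled as a plain dictionary rather than any real image format.

```python
from typing import Iterable, Mapping

# Hypothetical key name: the actual "Do Not Train" metadata field
# has not been published, so this is a stand-in for illustration.
DO_NOT_TRAIN_KEY = "do_not_train"

# Hypothetical values treated as "the artist opted out".
OPT_OUT_VALUES = {"1", "true", "yes"}


def allowed_for_training(metadata: Mapping[str, str]) -> bool:
    """Return False when the (hypothetical) opt-out flag is set."""
    value = str(metadata.get(DO_NOT_TRAIN_KEY, "")).strip().lower()
    return value not in OPT_OUT_VALUES


def filter_training_set(
    images: Iterable[tuple[str, Mapping[str, str]]]
) -> list[str]:
    """Keep only the image names whose metadata does not opt out."""
    return [name for name, meta in images if allowed_for_training(meta)]


if __name__ == "__main__":
    candidates = [
        ("sketch.png", {"author": "A", "do_not_train": "true"}),
        ("photo.jpg", {"author": "B"}),
    ]
    print(filter_training_set(candidates))
```

The sketch also shows the weak point: the check only does anything if whoever builds the training pipeline chooses to run it.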

That potentially sets Adobe up to compete on a no-training standard. Stability AI, which makes the image generator Stable Diffusion, has already committed to supporting the artist opt-out requests registered via the “Have I Been Trained?” website. Artists who register their work will have it removed from the training data for Stable Diffusion’s next major release.

Firefly’s first two tools will be available in a public beta starting today. You won’t need to be a Creative Cloud subscriber to request access, but Adobe will be limiting how many people it allows into the beta.