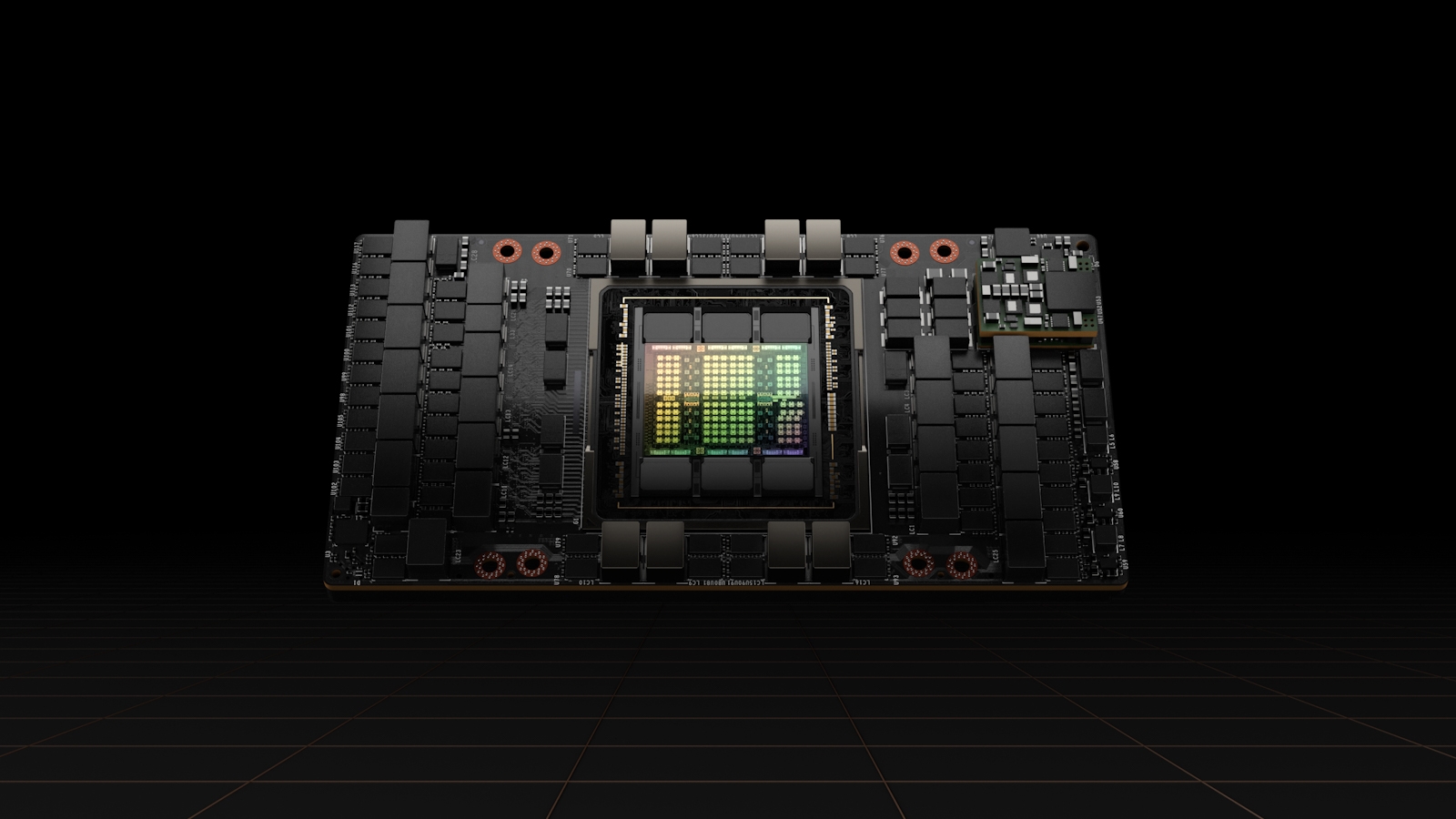

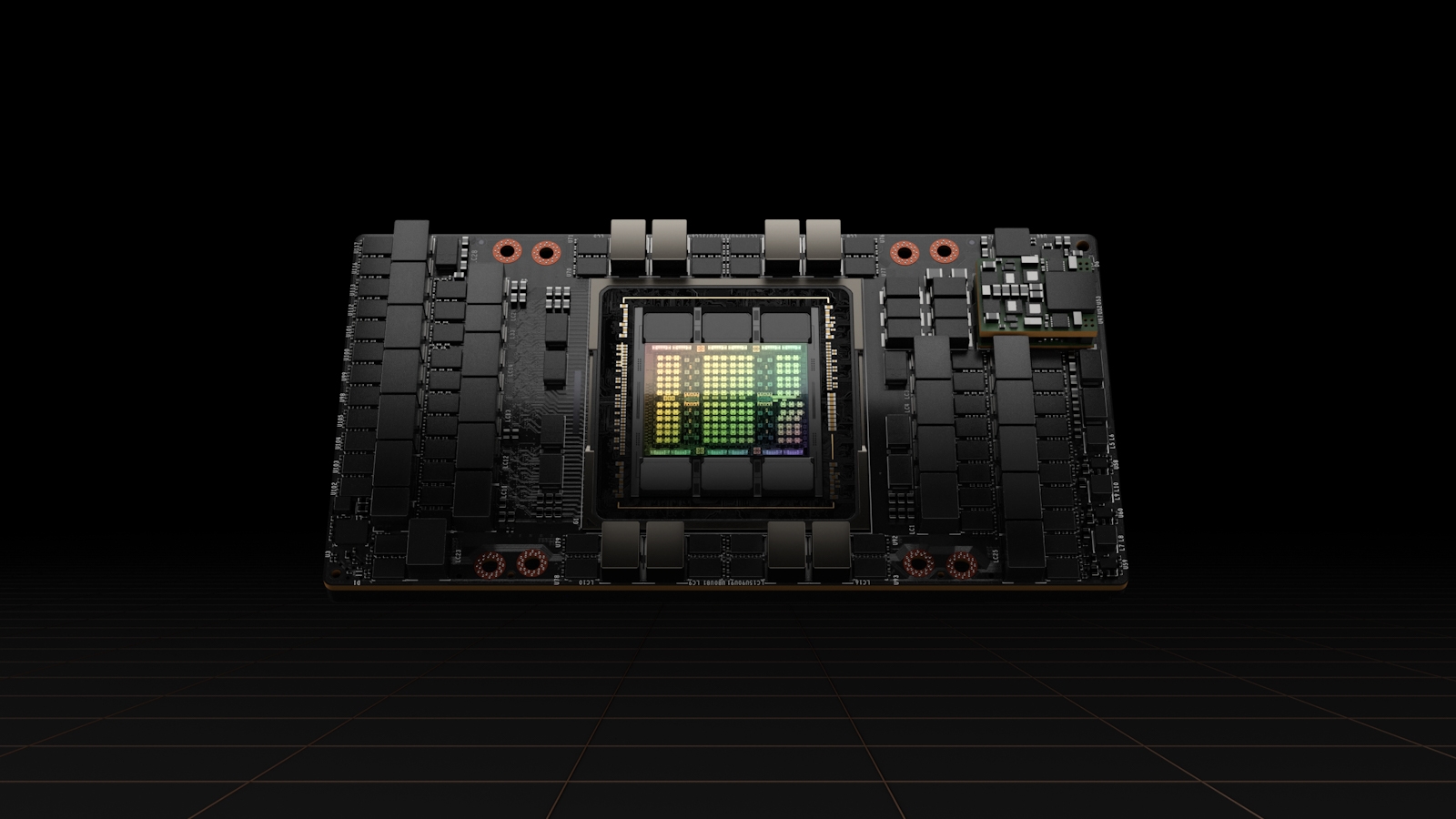

Nvidia Gimps H100 Hopper GPU to Sell as H800 to China

You won’t find Nvidia’s H100 (Hopper) GPU on the list of the best graphics cards. Instead, the H100’s forte is artificial intelligence (AI), which makes it a coveted GPU in the AI industry. And now that everyone is jumping on the AI bandwagon, Nvidia’s H100 has become even more popular.

Nvidia claims that the H100 delivers up to 9X faster AI training performance and up to 30X faster inference performance than the previous-generation A100 (Ampere). With performance at that level, it’s easy to see why everyone wants to get their hands on an H100. Now, Reuters reports that Nvidia has modified the H100 to comply with U.S. export rules so that the chipmaker can sell the altered chip in China as the H800.

Last year, U.S. officials implemented several regulations to prevent Nvidia from selling its A100 and H100 GPUs to Chinese clients. The rules restricted exports of GPUs with chip-to-chip data transfer rates of 600 GBps or more. Transfer speed is paramount in the AI world, where systems have to move enormous amounts of data around to train AI models such as ChatGPT. Throttling the chip-to-chip data transfer rate results in a significant performance hit: slower links increase the time it takes to move data between GPUs, which in turn increases training time.
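To make that relationship concrete, here is a back-of-the-envelope sketch. It assumes an idealized link where transfer time is simply data volume divided by bandwidth, ignoring latency, protocol overhead, and any overlap of communication with computation. The 1,200 GB payload is a made-up figure for illustration, not a measured workload.

```python
def transfer_time_seconds(payload_gb: float, link_gb_per_s: float) -> float:
    """Idealized time to move payload_gb gigabytes over a link of link_gb_per_s GB/s.

    Assumption: no latency, no protocol overhead, no compute/communication overlap.
    """
    if link_gb_per_s <= 0:
        raise ValueError("link bandwidth must be positive")
    return payload_gb / link_gb_per_s


# Hypothetical payload exchanged between two GPUs (illustrative only).
PAYLOAD_GB = 1200.0

for label, bandwidth in [("600 GBps link", 600.0), ("300 GBps link", 300.0)]:
    print(f"{label}: {transfer_time_seconds(PAYLOAD_GB, bandwidth):.1f} s")
```

Under these simplifying assumptions, halving the link bandwidth doubles the time spent moving the same data (2.0 s versus 4.0 s here). Real training runs hide some of this cost by overlapping communication with computation, so the end-to-end slowdown is usually smaller than the raw ratio.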

With the A100, Nvidia trimmed the GPU’s 600 GBps interconnect down to 400 GBps and rebranded it as the A800 to sell it in the Chinese market. Nvidia is taking the same approach with the H100.

According to Reuters’ source in the Chinese chip industry, Nvidia reduced the H800’s chip-to-chip data transfer rate to approximately half that of the H100. That would leave the H800 with an interconnect restricted to 300 GBps. That’s a deeper cut than the A800 received, whose chip-to-chip data transfer rate is 33% lower than the A100’s. However, the H100 is substantially faster than the A100, which could be why Nvidia imposed a more severe chip-to-chip data transfer limit on the H800.
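The percentages above follow from simple arithmetic, sketched here using only the figures in this article. Because Reuters’ source describes the H800’s rate only as “approximately half” of the H100’s, the sketch models that cut in relative units (1.0 down to 0.5) rather than assuming a specific baseline bandwidth.

```python
def percent_reduction(original: float, reduced: float) -> float:
    """Return how much lower `reduced` is than `original`, as a percentage."""
    if original <= 0:
        raise ValueError("original must be positive")
    return (original - reduced) / original * 100.0


# A100 -> A800: 600 GBps trimmed to 400 GBps, per the article.
a800_cut = percent_reduction(600.0, 400.0)

# H100 -> H800: "approximately half", modeled in relative units.
h800_cut = percent_reduction(1.0, 0.5)

print(f"A800 vs A100: {a800_cut:.1f}% lower")  # 33.3% lower
print(f"H800 vs H100: {h800_cut:.1f}% lower")  # 50.0% lower
```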

Reuters asked an Nvidia spokesperson what differentiates the H800 from the H100, but the representative would only say that “our 800-series products are fully compliant with export control regulations.”

Nvidia already counts three of China’s most prominent technology companies among H800 users: Alibaba Group Holding, Baidu Inc., and Tencent Holdings. With ChatGPT banned in China, these tech giants are racing one another to produce a domestic ChatGPT-like model for the Chinese market. And while an H800 with half the chip-to-chip transfer rate will undoubtedly be slower than the full-fat H100, it will still be far from slow. With companies potentially deploying thousands of Hopper GPUs, we have to wonder whether they will ultimately need more H800s to accomplish the same work as fewer H100s.