Elon Musk Buys Thousands of GPUs for Twitter's Generative AI Project

Despite advocating for an industry-wide pause in AI training, Elon Musk has reportedly kicked off a major artificial intelligence project within Twitter. The company has already purchased approximately 10,000 GPUs and recruited AI talent from DeepMind for the project, which involves a large language model (LLM), Business Insider reports.

One source familiar with the matter said that Musk’s AI project is still in its initial phase. According to another individual, however, acquiring this much additional computational power suggests that Musk is committed to advancing the project. The exact purpose of the generative AI is unclear, but potential applications include improving search functionality or generating targeted advertising content.

At this point, it is unknown exactly what hardware Twitter procured. However, the company has reportedly spent tens of millions of dollars on these compute GPUs despite its ongoing financial problems, which Musk describes as an ‘unstable financial situation.’ The GPUs are expected to be deployed in one of Twitter’s two remaining data centers, with Atlanta being the most likely destination. Notably, Musk closed Twitter’s primary data center in Sacramento in late December, which lowered the company’s compute capabilities.

In addition to buying GPU hardware for its generative AI project, Twitter is hiring additional engineers. Earlier this year, the company recruited Igor Babuschkin and Manuel Kroiss, engineers from the AI research lab DeepMind, a subsidiary of Alphabet. Musk has been actively seeking talent in the AI industry to compete with OpenAI’s ChatGPT since at least February.

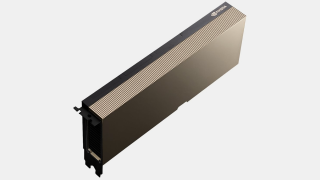

OpenAI used Nvidia’s A100 GPUs to train its ChatGPT bot and continues to use these machines to run it. Nvidia has since launched the A100’s successor, the H100 compute GPU, which it positions as several times faster at around the same power. Twitter will likely use Nvidia’s Hopper H100 or similar hardware for its AI project, though we are speculating here. Since it is not yet clear what the AI project will be used for, it is hard to estimate how many Hopper GPUs the company may need.

Big companies like Twitter buy hardware at special rates because they procure thousands of units at a time. By contrast, when purchased individually from retailers like CDW, Nvidia’s H100 boards can cost north of $10,000 per unit, which gives a rough upper reference point for how much the company might have spent on hardware for its AI initiative.
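
As a rough illustration of that arithmetic, the sketch below multiplies the reported GPU count by the retail price floor and then applies a few volume discounts. The discount levels are purely hypothetical, and the calculation assumes every unit is an H100 board, which has not been confirmed.

```python
# Back-of-the-envelope estimate only. Twitter's actual hardware and
# pricing are unknown; the discount values below are hypothetical.

GPU_COUNT = 10_000         # approximate number of GPUs reported
RETAIL_PRICE_USD = 10_000  # "north of $10,000" per H100 board at retail


def fleet_cost(gpu_count, unit_price_usd, discount=0.0):
    """Return the total cost of gpu_count units after a fractional discount."""
    if not 0.0 <= discount < 1.0:
        raise ValueError("discount must be in the range [0, 1)")
    return gpu_count * unit_price_usd * (1.0 - discount)


if __name__ == "__main__":
    # Hypothetical volume discounts, for illustration only.
    for discount in (0.0, 0.25, 0.5):
        total = fleet_cost(GPU_COUNT, RETAIL_PRICE_USD, discount)
        print(f"discount {discount:.0%}: ${total / 1e6:,.0f} million")
```

At the retail floor with no discount, 10,000 units would come to about $100 million, more than the reported "tens of millions of dollars." That gap would be consistent with volume pricing, less expensive hardware, or both, but the available reporting does not say which.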