16GB RTX 3070 Mod Shows Impressive Performance Gains

YouTuber Paulo Gomes recently published a video showing how he upgraded a customer’s RTX 3070, once one of Nvidia’s best graphics cards, to 16GB of GDDR6 memory. The modification produced serious performance improvements in the highly memory-intensive Resident Evil 4, where the 16GB card performed 9x better than the 8GB version in the 1% lows, resulting in significantly smoother gameplay.

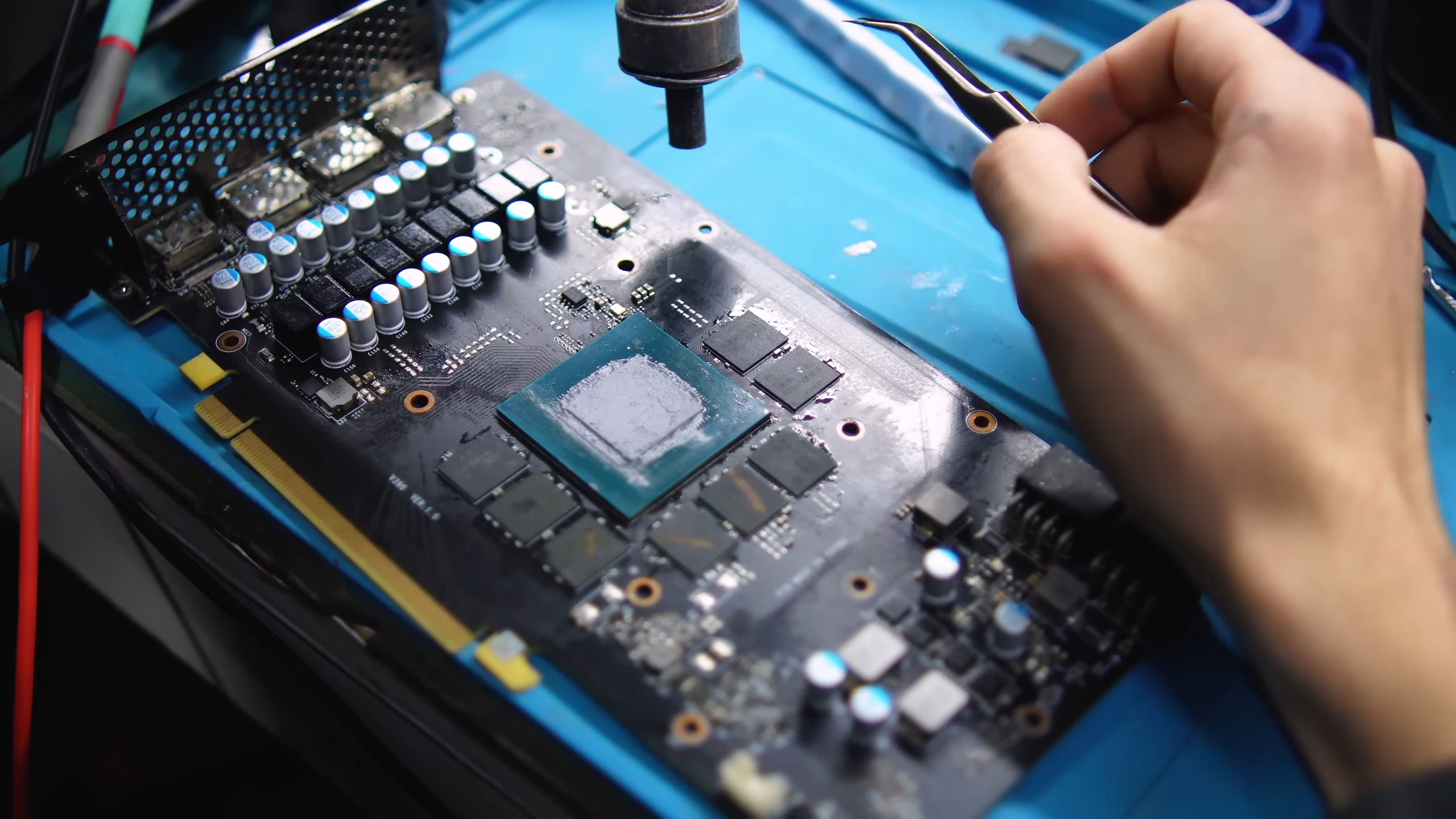

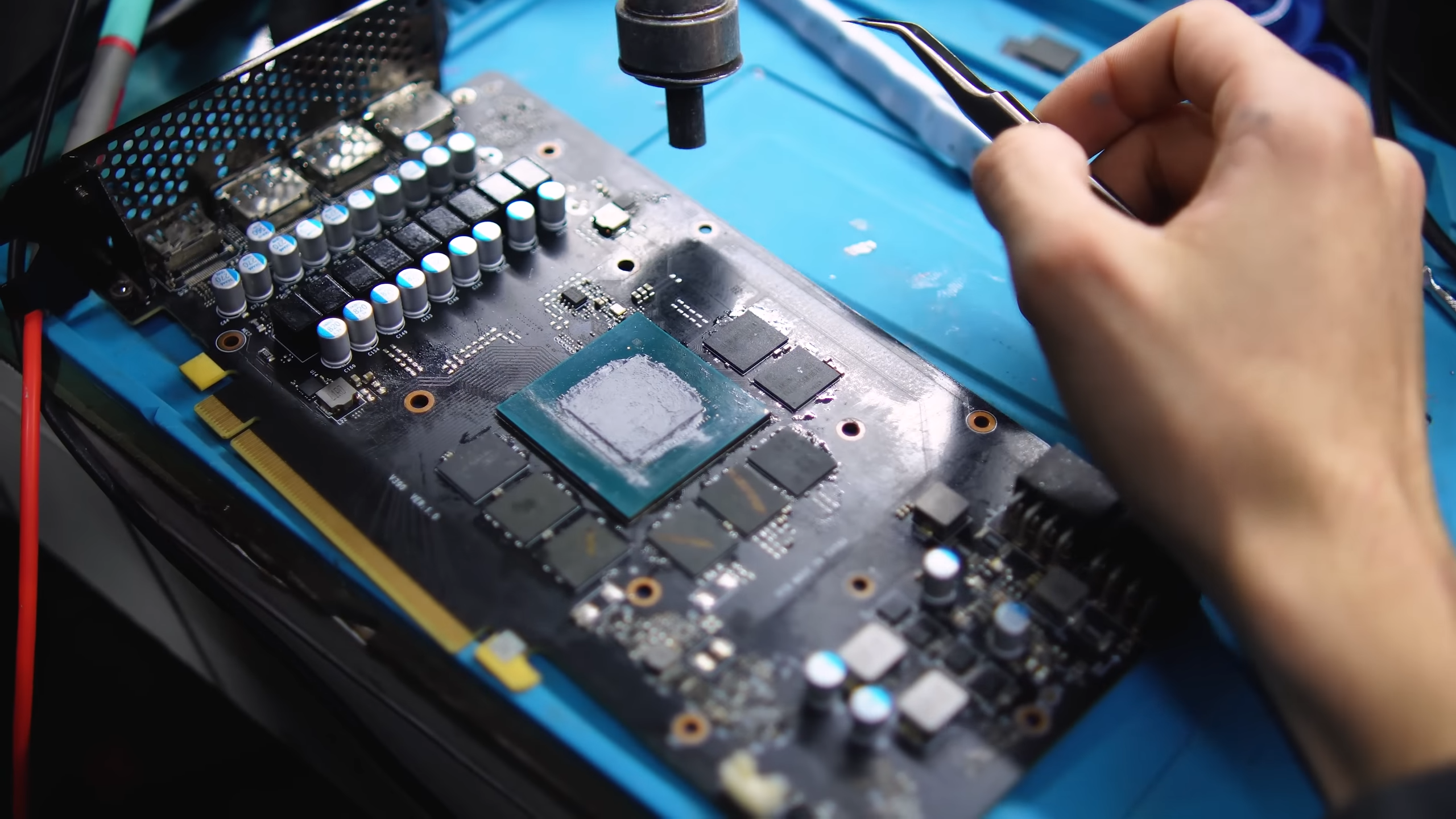

Unlike previous memory mods we’ve seen on cards like the RTX 2070, Gomes’ RTX 3070 mod required some additional PCB work to get the 16GB memory configuration working properly. The modder had to ground some of the resistors on the PCB to trick the graphics card into supporting the higher-capacity memory ICs that are required to double the VRAM capacity on the RTX 3070.

Apart from this step, the memory swap proceeded as usual. The modder removed the original memory chips from the graphics card, cleaned the PCB, and installed new Samsung 2GB memory ICs to make up the 16GB configuration. Aside from some initial flickering, which was fixed by running the GPU in high-performance mode from the Nvidia Control Panel, the card performed perfectly with the new modifications.
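The capacity math behind the swap is simple enough to sketch. The snippet below is only an illustration: the chip count of eight and the 1GB capacity of the stock chips are assumptions based on the RTX 3070's standard memory layout, not figures given in the video, and `total_vram_gb` is a hypothetical helper name.

```python
def total_vram_gb(chip_count: int, chip_capacity_gb: int) -> int:
    """Total VRAM is the number of memory ICs times the capacity of each."""
    return chip_count * chip_capacity_gb


# Assumption: eight memory ICs on the board (not stated in the article).
CHIP_COUNT = 8

stock_gb = total_vram_gb(CHIP_COUNT, 1)   # assumed 1GB stock chips
modded_gb = total_vram_gb(CHIP_COUNT, 2)  # Samsung 2GB chips from the mod

print(f"stock: {stock_gb}GB, modded: {modded_gb}GB")
```

Because the number of chips stays the same, doubling the capacity of each IC is what doubles the card's VRAM.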

The additional 8GB of VRAM proved extremely useful in boosting the RTX 3070’s performance in Resident Evil 4. The modder tested the game with both the original 8GB configuration and the modified 16GB configuration and found massive improvements in the card’s 1% and 0.1% lows. The original card managed just one (yes, one) FPS in both its 1% and 0.1% lows, while the 16GB card delivered 60 and 40 FPS, respectively. Average frame rates also went up, from 54 to 71 FPS, an increase of roughly 31%.
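For readers unfamiliar with these metrics, here is a minimal sketch of one common way to derive 1% and 0.1% lows from a frame-time log: average the slowest slice of frames and convert that to FPS. Benchmarking tools differ in their exact definitions, and the video does not say which method was used, so treat both the function `percentile_low_fps` and the synthetic data as hypothetical.

```python
def percentile_low_fps(frame_times_ms, percent):
    """Average FPS over the slowest `percent` of frames.

    This is one common definition of "X% lows"; some tools instead
    report the FPS at the corresponding frame-time percentile.
    """
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    slowest_first = sorted(frame_times_ms, reverse=True)
    # Always keep at least one frame so short logs still yield a value.
    count = max(1, int(len(slowest_first) * percent / 100))
    worst = slowest_first[:count]
    mean_ms = sum(worst) / len(worst)
    return 1000.0 / mean_ms


def average_fps(frame_times_ms):
    """Average FPS: total frames divided by total elapsed time."""
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)


# Synthetic log (made up for illustration): mostly smooth 14 ms frames
# with ten one-second hitches mixed in.
frame_times = [14.0] * 990 + [1000.0] * 10

print("average FPS:", round(average_fps(frame_times), 1))
print("1% low FPS:", percentile_low_fps(frame_times, 1))
print("0.1% low FPS:", percentile_low_fps(frame_times, 0.1))
```

The synthetic log shows how a handful of long hitches can drag the lows down to single digits while the average still looks playable.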

This resulted in a major upgrade to the overall gaming experience on the 16GB RTX 3070. The substantially higher 0.1% and 1% lows meant that the game was barely hitching at all, and performance was buttery smooth. The 8GB card, by contrast, suffered from severe micro-stuttering that lasted for a significant amount of time in several areas of the game.

It’s interesting to see what this mod has done for the RTX 3070, and it shows what such a GPU could do in 2023’s latest AAA titles when it’s not bottlenecked by video memory. This issue has plagued Nvidia’s 8GB RTX 30-series GPUs for some time now, especially the more powerful RTX 3070 Ti, where the 8GB frame buffer is not big enough to run those titles smoothly at high or ultra quality settings.