Figure’s humanoid robot takes its first steps

Toward the rear of the office, an engineer is working on a metal hand. It looks human enough — roughly the same size with four fingers and a thumb. The Figure team is methodically testing every piece of their robot skeleton before piecing them all together to watch the prototype take its first steps — something founder and CEO Brett Adcock promises is mere days away.

The hand is opening and closing, one of those tasks an engineer needs to perform ad nauseam before moving on to more complex things like mobile manipulation. “This is pretty new,” says Adcock. “We started the first five-finger wiggles last week.”

One finger in particular is getting the most action. The executive apologizes. “We had a customer in here yesterday, and we did a demo,” he explains. “It was doing that every single time, and we were like, ‘huh, that’s weird.’ It’s just flipping them off. Everybody.”

It’s best not to read too much into such things — certainly not at this early a stage. The startup is well-funded, bootstrapped with $100 million from the fortune Adcock amassed founding companies like employee marketplace Vettery and EVTOL maker Archer. Figure celebrates its one-year anniversary on May 20.

It’s made some impressive progress in that time. That’s due, in no small part, to Figure’s aggressive hiring. Many of its 51 staff members came from places like Boston Dynamics, Tesla and Apple. CTO Jerry Pratt was a research scientist at the Institute for Human and Machine Cognition for 20 years.

The first two companies continue to loom large over the project. Boston Dynamics’ Atlas is still very much the gold standard for humanoid robots. It’s pulled off extremely impressive stunts on video, and having spent some time with it at the company’s offices, I can attest to the fact that such activities are even more impressive in person. That’s what a lot of smart people, DARPA funding and 30+ years of research will get you. The company’s work has always felt aspirational, and many former employees have gone on to help shape today’s robotics landscape.

Image Credits: Boston Dynamics

But Atlas isn’t a product. It’s an ambitious research project — something its creators have made very clear from day one. That isn’t to say its breakthroughs won’t inform future projects (they undoubtedly will), but the company has said it has no intention of commercializing the robot. Boston Dynamics has entered the industrial robotics space, but there’s a reason it’s prioritized Spot and Stretch over a general-purpose humanoid robot.

“I think there’s been this lack for 10 years, ever since the [DARPA Robotics Challenge] and the [NASA Space Robotics Challenge],” says Adcock. “The only one that’s really been pushing on it has been Boston Dynamics. Tesla coming out and saying, ‘we’re gonna really take a serious look at this commercially,’ which Boston Dynamics has not been doing, has been really positive for the industry.”

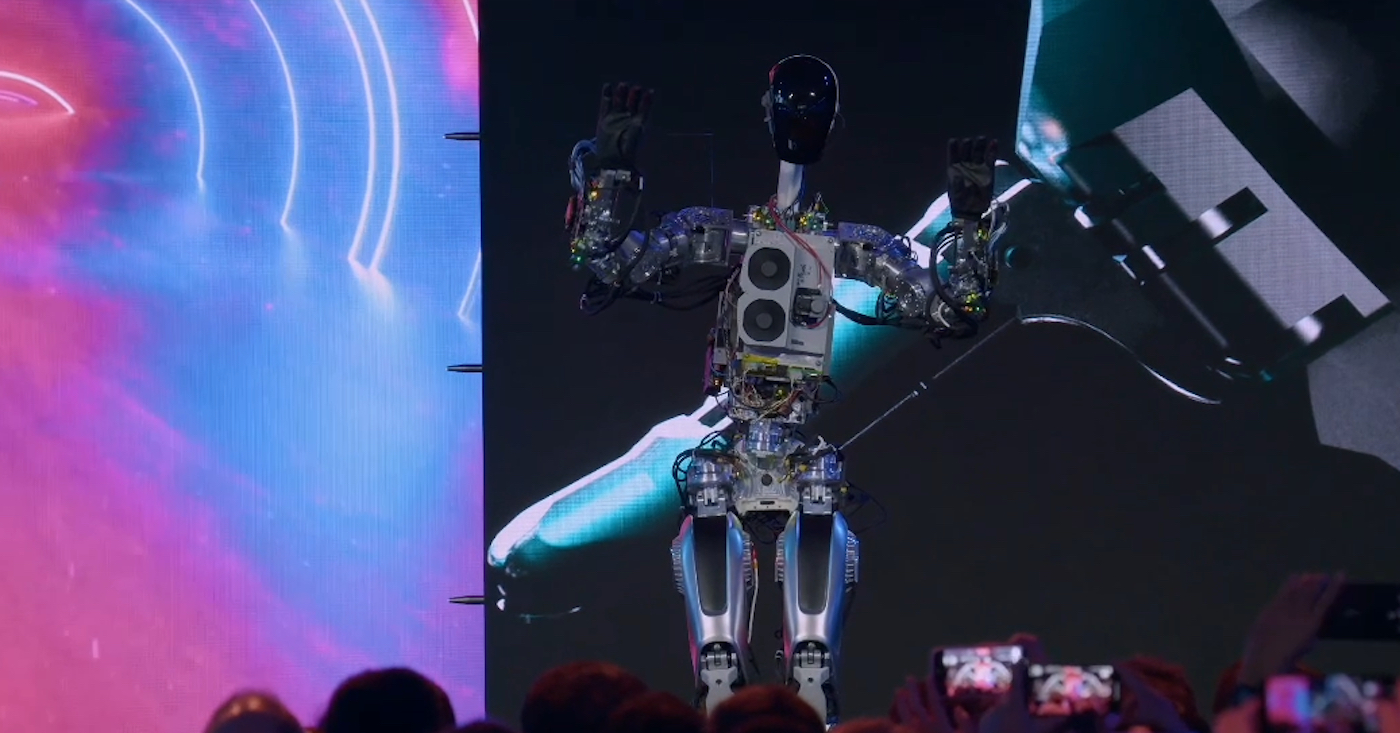

Whatever one thinks about Tesla’s ambitions (let’s just say I’ve heard very mixed things from well-positioned people in the industry), Elon Musk’s August 2021 Optimus (née Tesla Bot) announcement shook something loose in the industry. Boston Dynamics founder Marc Raibert summed things up well when he told me, “I thought that they’d gotten a lot more done than I expected, and they still have a long way to go.”

Image Credits: Tesla

Optimus didn’t legitimize the notion of an all-purpose humanoid robot, exactly, but it forced a lot of hands. It’s a risky bet to reveal such an ambitious product early in the development process, but in the subsequent months, we’ve come to know several more startups that have thrust their names into the category. We broke the news of Figure’s existence back in September. In March, the company made things official, coming out of stealth with some robot renders in hand.

Vancouver-based Sanctuary has been working on several iterations of its humanoid, including Phoenix, a 5’7”, 155-pound robot that was unveiled earlier this week. The company has also been running limited pilots with partners. And then there’s 1X. The Norwegian firm made headlines in March with a $23.5 million Series A2, led by OpenAI. That the ChatGPT developer invested so much in a humanoid is a big vote of confidence in the future intersection between robots and generative AI.

The sudden propagation of competitors has caused some confusion, not helped by the fact that there seems to be a good deal of convergent evolution among product designs. One major news site recently ran a story headlined, “OpenAI and Figure develop terrifyingly creepy humanoid robots for the workforce,” confusing 1X for Figure, a mix-up that continues to be a source of some annoyance. “Terrifyingly creepy,” meanwhile, is a fairly standard descriptor for robots from non-roboticists, perhaps pointing to a long road toward more mainstream acceptance.

What’s remarkable about the Figure office is how unremarkable it is from the outside. It’s a 30,000-square-foot space among office parks in a relatively sparse part of Sunnyvale (insofar as anything in the South Bay can be meaningfully labeled “sparse”), within a 10-, 15- and 20-minute drive of Meta, Google and Apple, respectively. It’s a long, white building with no visible signage, owing to the permitting involved in adding such things.

Inside, it has that new office smell. There are still a number of empty desks, an indicator of future growth, though not nearly as aggressive as the growth the company experienced a year ago. “We’re hiring very carefully,” says Adcock. “We’ve scaled the team to about where we probably need to be. Our headcount is pretty strong for the size of the company. I don’t think we ever want to turn away the right person for the job.”

Image Credits: Figure

There is a lot of plate juggling at the various workstations. Employees are focused on different aspects concurrently, all of which will ultimately feed into the same bipedal system. It might feel like the parable of the blind men and the elephant, were the space not peppered with reminders about where this is all heading.

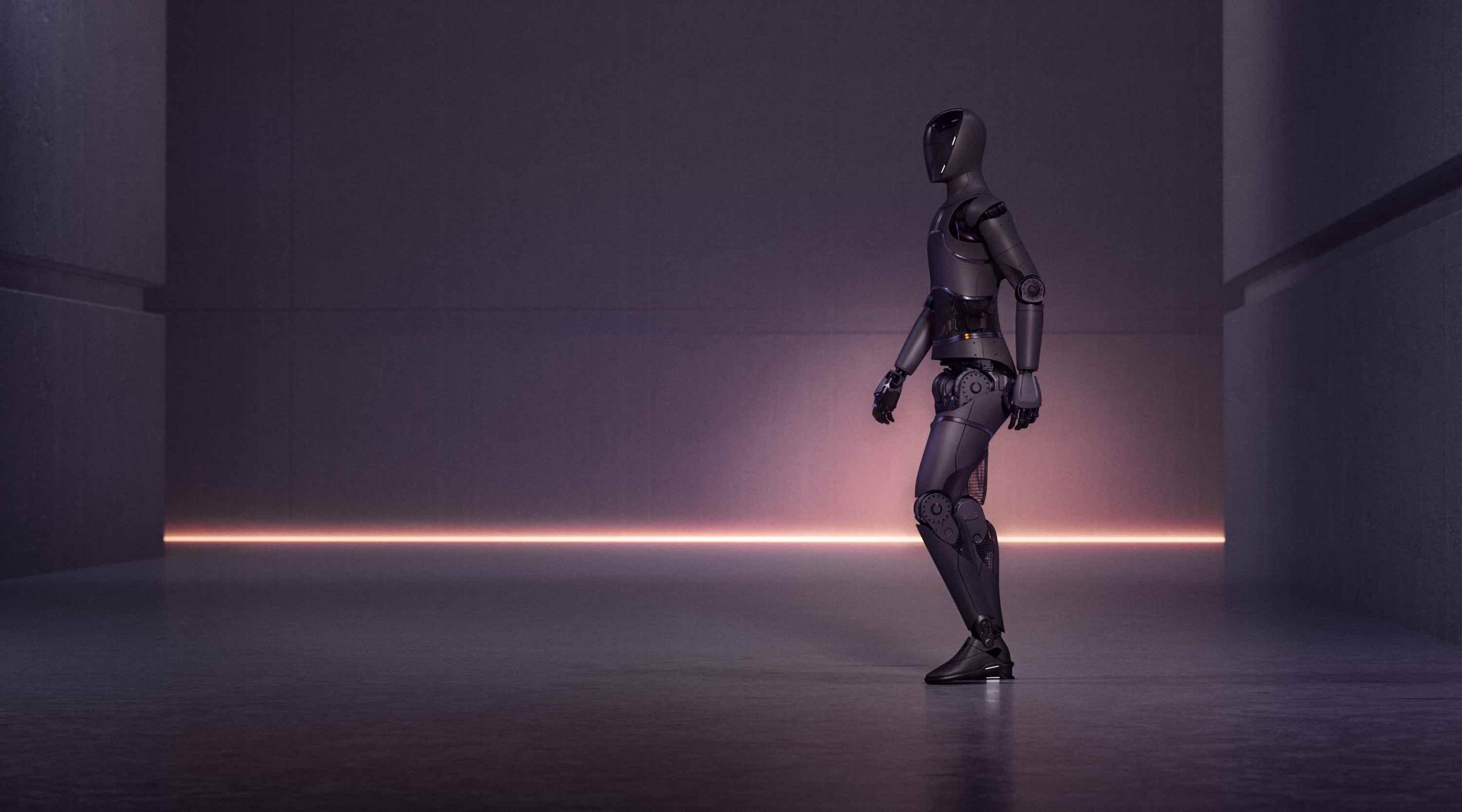

Along the streetside wall hangs a series of posters showing iterations similar to the renders Figure has already shared with the world. It’s an all-black body, topped off with the sort of black, Daft Punk-style helmet that turns up in many humanoid robot designs. While those in the industry bristle at the dystopian sci-fi references that crop up any time a robot is unveiled (Black Mirror, Terminator, you’re all hilarious), it’s not difficult to see why such systems can give bystanders pause.

These are sleek, futuristic designs that feel like an homage to iconic science fiction androids like the variety found in the Star Wars universe. They exist on the ridges of an uncanny valley that will only deepen as people continue to anthropomorphize these machines. Plenty have warned against it. When I spoke to Joanna Bryson last week, she referenced her best-known paper, Robots Should Be Slaves, in which she writes:

The principal question is whether robots should be considered strictly as servants — as objects subordinate to our own goals that are built with the intention of improving our lives. Others in this volume argue that artificial companions should play roles more often reserved for a friend or peer. My argument is this: given the inevitability of our ownership of robots, neglecting that they are essentially in our service would be unhealthy and inefficient. More importantly, it invites inappropriate decisions such as misassignations of responsibility or misappropriations of resources.

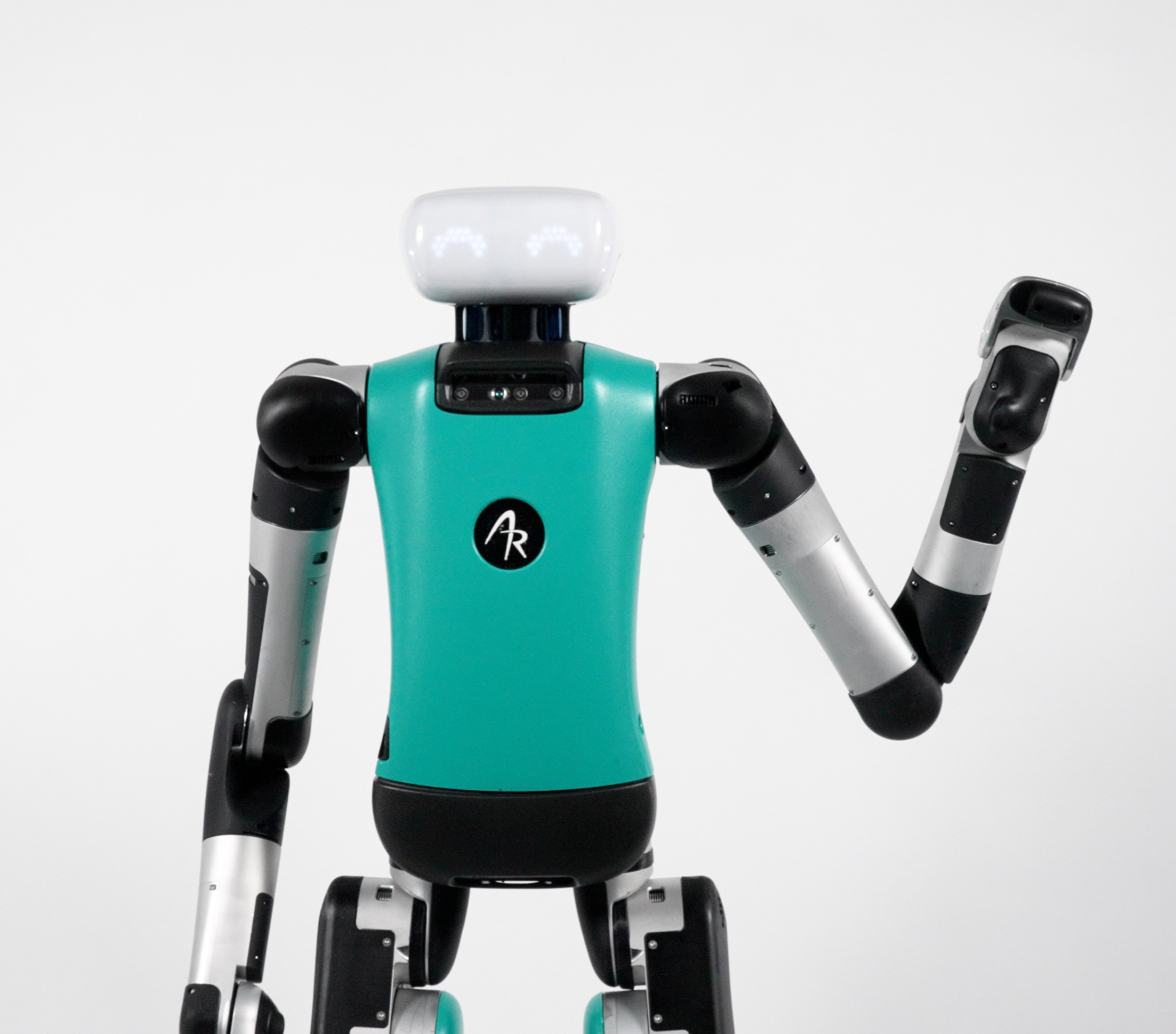

Image Credits: Agility Robotics

Looking at Agility’s Digit robot, you really have to squint to find much resembling a human or even an animal. But watch it get knocked over, and you might feel that pit in your stomach. It’s a distinct feeling from, say, watching someone drop a computer. Heck, iRobot CEO Colin Angle once pointed out to me people’s tendency to dress up and name their Roombas. Many I’ve spoken with who work on the industrial side of things tell me that humans often name their robot co-workers, as well.

The fact is, however, that the Figure 01 will largely operate out of sight for most of us to begin with. The most important thing is how human workers perceive it. Granted, if and when the company starts piloting these things in the real world, there will invariably be a flurry of news articles stirring these emotions all over again, but beginning with industry seems like a valid way to ease these machines into daily life.

Image Credits: Figure

The panels (or the armor, if you will) covering the robot’s metal skeleton serve twin purposes. The first is simply aesthetic. The second is safety, a big concern when industrial robots work alongside people. In this specific instance, there are potential hazards: stick a finger in the wrong spot and it becomes an issue. Best to just cover them up and be done with it. The panels outfitting the prototype on display are currently made of 3D-printed plastic.

It’s hard to say how closely the prototype will hew to the renders once the wiring is in place and the panels are on board. It’s certainly skinnier than other humanoids I’ve seen and, at the very least, it has the potential to be closer to the aspirational images than some of the competition. It’s a fairly unique approach in a space where people generally build a big, clunky thing to start with, before paring things down. Figure’s approach would be more like if Apple made a MacBook body before building its first computer.

It’s an imperfect analogy, of course. For one thing, we now have extremely advanced simulations capable of running hundreds or thousands of tests within seconds. At the end of the day, there’s no replacement for good, old-fashioned real-world testing, but you can learn a lot about a system prior to deployment.

A lot of conversations led to the helmet in the renders, as well as the physical mockup in front of me. Eyes have, understandably, long been the go-to. There’s something about eyes that absorbs some of that initial shock. We’re hardwired to connect with eyes, and when we don’t see any, suddenly the pareidolia kicks in.

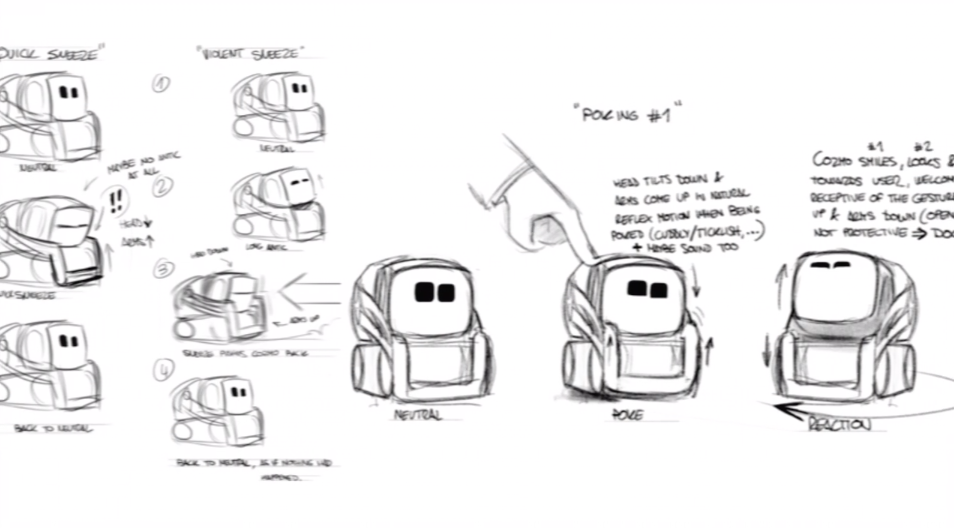

Image Credits: Anki

Eyes are the key to robots like Keepon, which are designed for children on the autism spectrum, and toys like Cozmo, for which the Anki team recruited former Pixar and DreamWorks animators. When robots don’t have eyes, people often take it upon themselves to add some. There are currently 1,223 results when you search “Roomba eyes” on Etsy. Eyes are also a big part of the reason Agility gave Digit a head. The eyes turn in a direction before the rest of the robot, in order to help avoid accidental collisions with people.

While Figure has taken a largely “if it ain’t broke, don’t fix it” approach to the subject of human evolution (Adcock and I had, for instance, a long conversation about the efficacy of the human hand), after much discussion, it moved away from eyes, in favor of something more complex.

“We’re working on some of the [human-machine interface] right now, so this is all placeholder tech,” he says, gesturing to one of the panel-covered robots on display. “We wanted to understand if we could build screens that are flexible and deformable. This is a new OLED screen we just got. It’s flexible and deformable. It’s like a piece of paper.”

Adcock credits David McCall, a former Rivian employee who now serves as Figure’s principal industrial designer, with progress on the display front. Instead of eyes, the display will feature text as a quick way to convey important information to human colleagues on the fly.

“You would basically want to convey all the information that’s going on from the face screen,” he adds. “If you prompt it, you know what’s going on. If you prompt it, you don’t want the robot to have a dead stare, like is it on or off? Is it going to run over me?”

Iterating on the robot has been a long process of examining it piece by piece. Oftentimes, human elements make the most sense for a robot designed to interact with human environments, but technological advances can sometimes trump them.

“We were trying out a bunch of different things,” says Adcock. “We spent a lot of time asking whether we need a head or not, and it looked really weird without a head. Actually, we have a lot of sensors not in the head. They’re all cameras. Some of our 5G and Wi-Fi is sitting up there. The head has a lot of sensors that we need, because the rest of the torso is literally filled with batteries and computing.”

Image Credits: Figure

Rather than one massive battery, the torso is stuffed with individual 2170 battery cells, the sort found in EVs like Tesla’s. During our conversation, an employee whizzes by on a skateboard carrying a large battery cluster destined for one of the systems in the back.

Cameras are located on various parts of the robot, including ones on the waist and rear; the former is designed to give the system a glimpse of what’s in front of it when the box it’s holding occludes its vision. Next to the panel-covered mockup is one of five all-metal skeletons on site. Some of the pieces are off-the-shelf components created for robotic or automotive applications, like the cross roller bearings manufactured specifically for industrial robotic arms. Increasingly, however, Figure is creating its own parts. In fact, the company opened a small machine shop in its office expressly for this purpose.

In one room are a half-dozen or so industrial metal machining systems. In the other is a series of desktop and industrial 3D printers for prototyping. The quick iterating is performed by Figure’s 15-person hardware team, which is largely composed of former Boston Dynamics employees.

The office’s centerpiece is a large cage enclosed in plexiglass panels. Inside are mockups of an industrial setting. It’s not quite the 1-to-1 factory/warehouse simulation I’ve seen visiting locations like Fetch’s San Jose offices; it’s more like the stage-play version. There are shelves, pallets and conveyor belts, each representing the initial jobs with which the system will eventually be tasked. The space serves the dual role of testing bed for the system and a kind of showroom where Figure can demonstrate the working robot for potential customers and investors.

While this has all come together extremely fast, it’s worth reiterating that it’s all still very much early stages. In fact, that’s why there are only office photos to accompany this piece, with the robots themselves cleared out. Figure is being very deliberate in what it chooses to show the world. The systems on display are the A/Alpha build of what will ultimately become the Figure 01. The B build is expected to be done by July and up and running in the offices by September.

Image Credits: Figure

“We just did bench testing [with the alpha unit] for the last 60 days,” says Adcock. “Lower body, upper body, arms, everything else. On Tuesday, the bottom half and the top half came together. It’s fully built here. We’ve got a full system, and we’re gonna try to do first walking […] before May 20, our one-year anniversary.”

One system currently sits suspended by a gantry that will ultimately be used to support the robot during those first steps. The analogy of a baby learning to walk is almost too obvious to speak aloud. It’s also a bit misleading, as this baby is a full-grown adult. Adcock pulls out his phone to show me some early testing, in which an external system is used to make the legs effectively run in place. Ultimately, however, the system will never travel faster than a walk.

Even tethered walks are hard. Untethered walks are, naturally, much harder. Making enough untethered walks a day to justify the operating costs versus a human, and doing so repeatably over the course of the robot’s working life, probably feels downright impossible most days. And all of that is just one of a million pieces that have to come together perfectly to make the product make sense. That no one has built a reliable general purpose humanoid robot is not for lack of trying, smarts or funding.

Image Credits: Figure

In many ways, the general purpose bit feels even harder than the humanoid bit. Sure, there are countless things that can (and invariably will) go wrong on the hardware side during testing, but there’s a broader question of whether all of the proper elements are in place to make a system like this sufficiently smart and adaptable. It needs to learn, grow and problem solve on the fly. Robots today, from the cheapest robot vacuum to the most complex industrial system, are designed to do one specific task well until they physically can’t anymore.

Adcock has assembled a team with impressive resumes, and the whole of humanoid robot research feels exciting. But the efficacy, viability and success of such a project need to be assessed at every step of what can feel like an impossibly long road. Questions and critiques surrounding these kinds of projects are more about practical concern than cynicism, schadenfreude or subterfuge.

While the whole of this is an ambitious undertaking, Figure appears to be targeting markets with more intentionality than Musk’s initial pitch did. The billionaire promised a “general-purpose” robot in the truest sense of the term: something that could work in a factory, then come home, do some shopping and help your older relatives live on their own.

Starting with an industrial focus, on the other hand, makes a lot more sense. First there’s the money. Even with the planned RaaS (robotics as a service) subscription model in place, it’s hard to imagine a system that isn’t prohibitively expensive for all but the most wealthy. Corporations, however, have far deeper pockets. Another key piece is the fact that the average warehouse or factory is far more structured than a home, which presents a slew of additional navigation and safety challenges.

None of that is to say that such things aren’t on the roadmap.

Image Credits: Figure

“We would like to build this out for the big game,” says Adcock. “The next 20 or 30 years. That would start here, doing basic stuff in the world, and then from there getting into more things through an over-the-air software update. So, it can load a truck. It might be palletizing and restocking shelves and cleaning floors. Then it can ultimately go into manufacturing into retail, and then over time (maybe 15 years from now) it can care for the elderly and do [other] things that are important.”

It’s a project bursting at the seams with ambition, but it’s also entirely too early to say anything definitively. The debate between purpose-built systems and general purpose robots will rage on for some time. Ditto for the real-world efficacy of reengineering a human. It’s true that we’ve built our environment to accommodate the human form factor, but it’s also fair to say that we haven’t evolved to be the most efficient creatures on Earth.

Regardless of how this plays out, however, it’s fascinating watching these first baby steps into a bigger world.