Intel CEO: ‘We’re going to build AI into every platform we build’

Intel CEO Pat Gelsinger was very bullish on AI during the company’s Q2 2023 earnings call — telling investors that Intel plans to “build AI into every product that we build.”

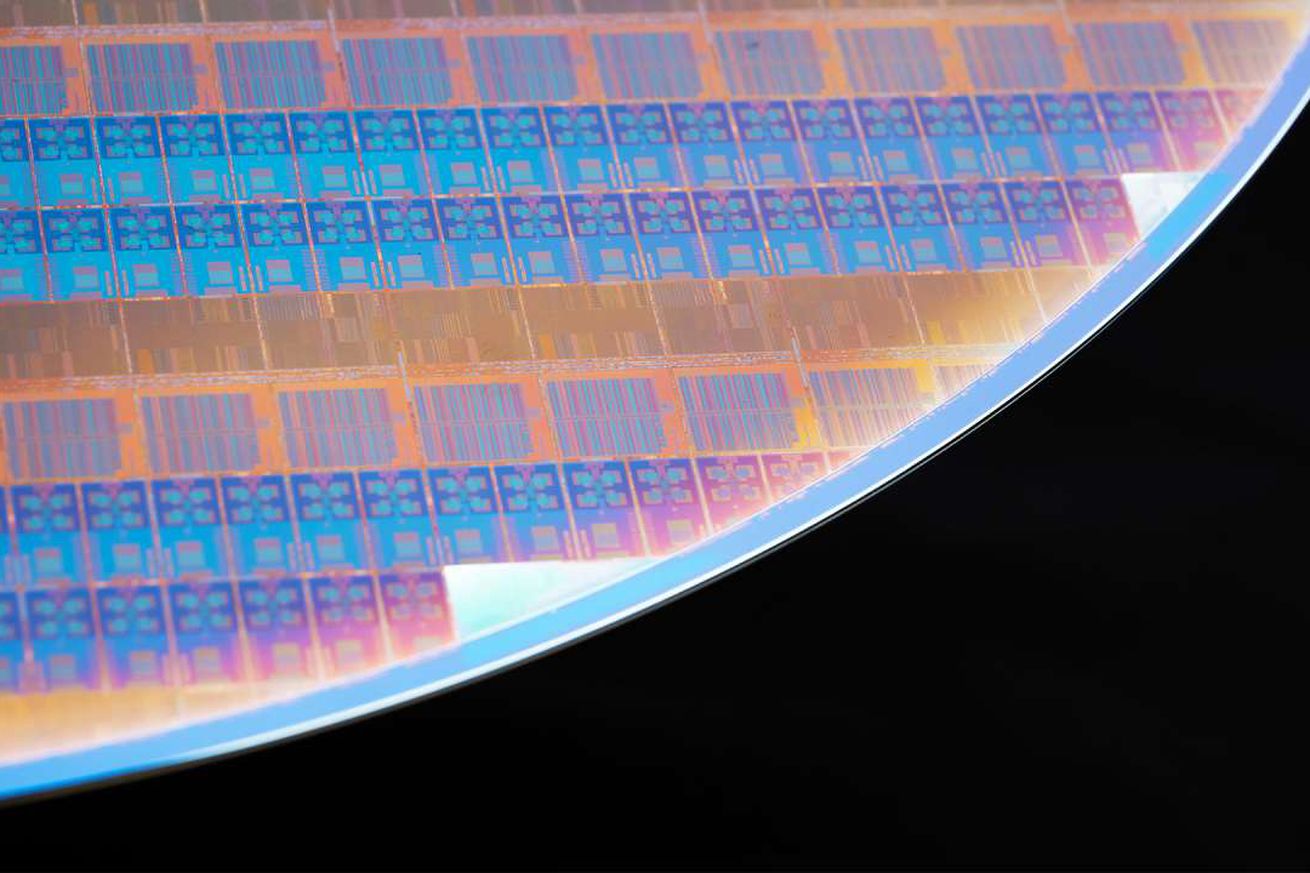

Later this year, Intel will ship Meteor Lake, its first consumer chip with a built-in neural processor for machine learning tasks. (AMD recently did the same, following Apple and Qualcomm.)

But while Intel previously suggested to us that only its premium new Ultra chips might have those AI coprocessors, it sounds like Gelsinger expects AI will eventually be in everything Intel sells.

Gelsinger likes to talk up the “four superpowers” or “five superpowers” of technology companies, which originally included both AI and cloud. But today, he’s suggesting that AI and cloud don’t necessarily go hand in hand.

Gelsinger:

Today, you’re starting to see that people are going to the cloud and goofing around with ChatGPT writing a research paper and, you know, that’s like super cool, right? And kids are of course simplifying their homework assignments that way, but you’re not going to do that for every client — because becoming AI enabled, it must be done on the client for that to occur, right? You can’t go to the cloud. You can’t round trip to the cloud.

All of the new effects: real-time language translation in your Zoom calls, real-time transcription, automation inferencing, relevance portraying, generated content and gaming environments, real-time creator environments through Adobe and others that are doing those as part of the client, new productivity tools, being able to do local legal brief generations on a client, one after the other, right? Across every aspect of consumer, developer and enterprise efficiency use cases, we see that there’s going to be a raft of AI enablement and those will be client-centered. Those will also be at the edge.

You can’t round trip to the cloud. You don’t have the latency, the bandwidth, or the cost structure to round trip, say, inferencing at a local convenience store to the cloud. It will all happen at the edge and at the client.

“AI is going to be in every hearing aid in the future, including mine,” he said at a different point in the call. “Whether it’s a client, whether it’s an edge platform for retail and manufacturing and industrial use cases, whether it’s an enterprise data center, they’re not going to stand up a dedicated 10-megawatt farm.”

On the one hand, of course Intel’s CEO would say this. It’s Nvidia, not Intel, that makes the kind of chips that power the AI cloud. Nvidia’s the one that rocketed to a $1 trillion market cap because it sold the right kind of shovels for the AI gold rush. Intel needs to find its own way in.

But on the other hand, it’s true that not everyone wants everything in the cloud — including cloud provider Microsoft, which still makes a substantial chunk of its money selling licenses for Windows PCs.

This January, Windows boss Panos Panay attended the launch of AMD’s chip with a built-in neural processor to tease that “AI is going to reinvent how you do everything on Windows,” and those weren’t idle words. My colleague Tom now believes Microsoft’s new AI-powered Copilot, revealed in March, will change Office documents forever, and Copilot is also being integrated into Windows itself. But Copilot is currently powered by the cloud and will cost $30 per user per month.

The next version of Windows is the one to watch. A leak has already suggested that Intel’s Meteor Lake — and its built-in neural engine — is pointed at Windows 12.