Hands on with Apple Vision Pro in the wild

There are a few Apple Vision Pro headsets out in the wild, and recently we got a chance to use one of them. Here’s what we thought.

I’ve known this day was coming for a long time. Rumors have been floating around about this headset for about five years. ARKit was a clear herald that something like the Apple Vision Pro was imminent.

We got a brief demo of the Apple Vision Pro at WWDC. Thanks to a fan of AppleInsider, I recently got an opportunity to spend about two hours with a unit.

If I had been allowed to take pictures, and I wasn’t, it would be obvious with whom and where I used the headset. So, regretfully, words will have to suffice.

Not my first AR and VR rodeo

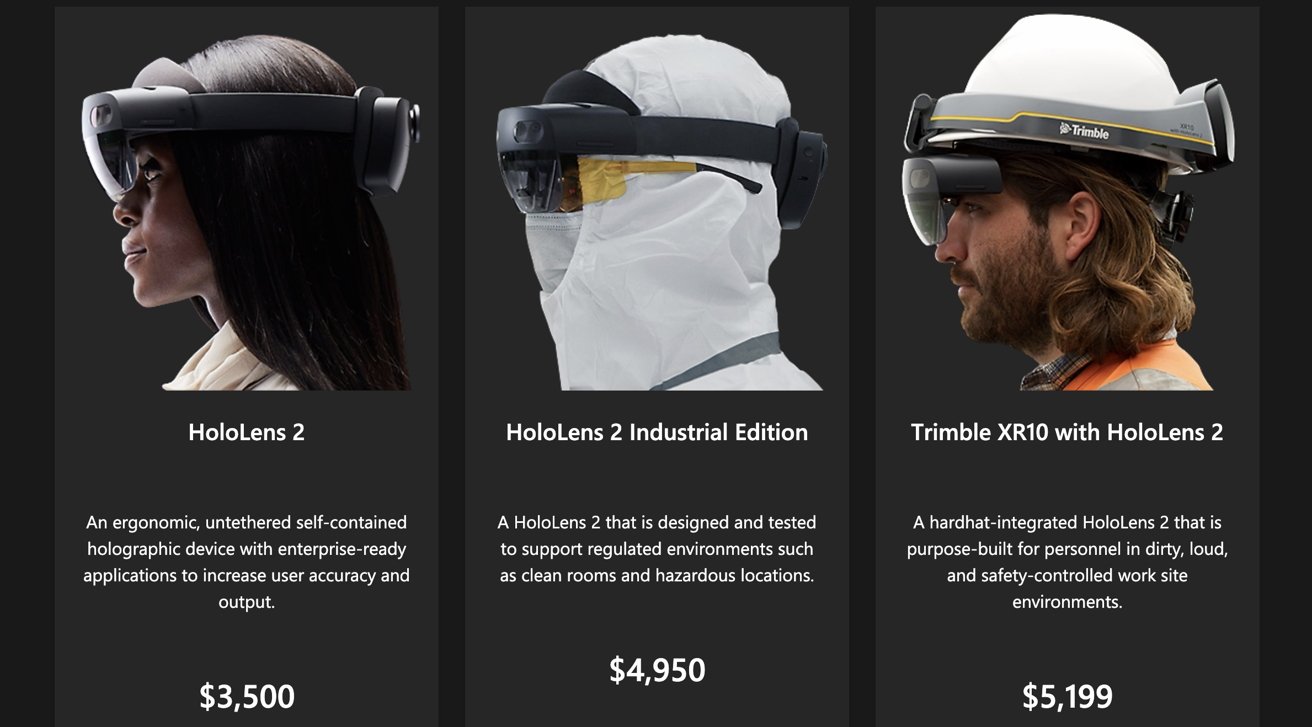

I’ve used both the Valve Index and HTC Vive a great deal as I own both. I have experience with every Oculus headset that Facebook/Meta has released, and spent some time with Microsoft’s now mostly-defunct HoloLens.

Apple’s headset is closest to HoloLens in intent, pricing, and use cases, as far as I can tell right now — but developers will ultimately get the final say.

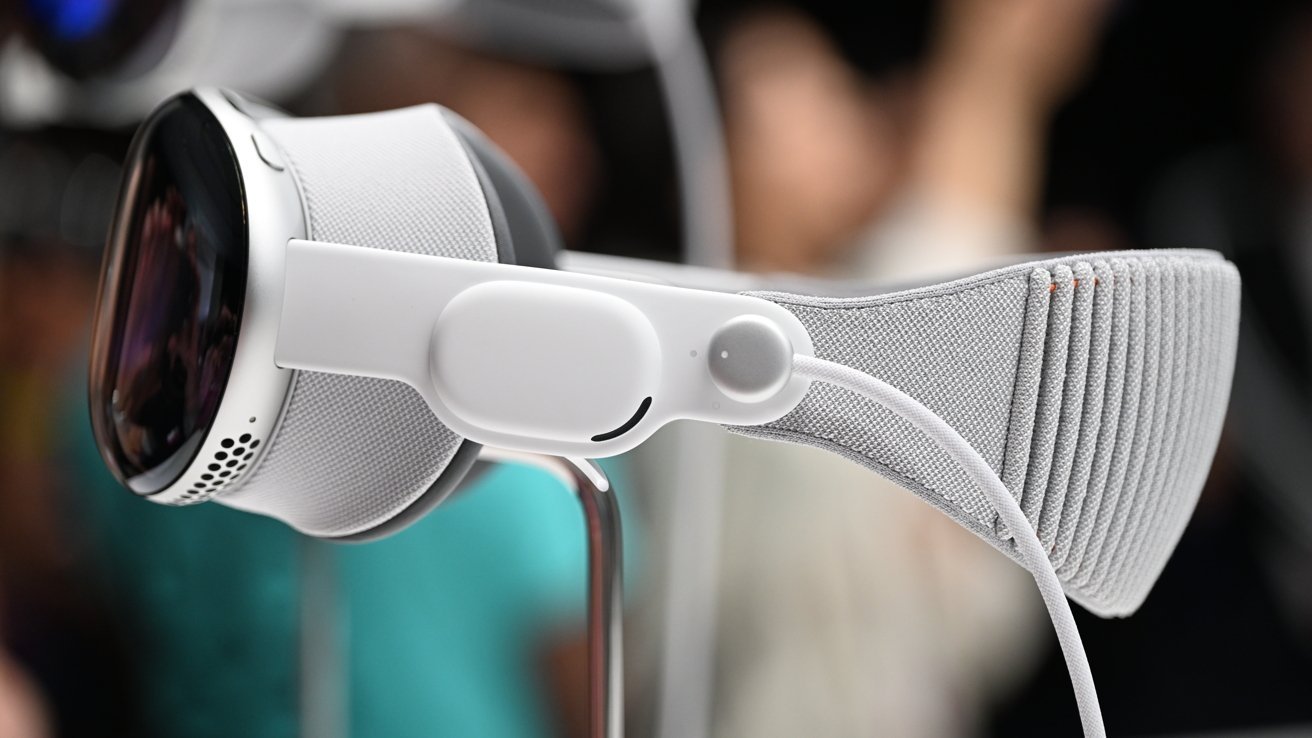

Apple’s design ethos is clear in the headset. It’s using fabric not dissimilar in feel to AirPods Max ear pads, blended with modern iPhone design. The curved glass is a marvel, and engineered precisely for the cameras and infrared projectors that live underneath it.

Setup was easy. The iPhone does most of the on-boarding in a manner similar to an initial setup of Face ID. A separate step with a vertical head-move scans your ears to tailor spatial audio.

I don’t wear glasses or contacts, nor were there lenses available to me to test-fit inside the headset. Looking inside the headset, it’s clear where the lenses will magnetically attach.

It’s very early, but I would very much like Apple to discuss now how much these lenses will cost. I would also like to know if there will be special lenses available for users with vision cuts from a stroke, hemianopsia, or more dramatic vision issues than just not having 20/20 vision.

Apple Vision Pro – field of vision

The Apple Vision Pro has a wider vertical field of view than anything else I’ve used. There’s no good way to scientifically measure this at the moment, but it feels like there’s almost twice the vertical field of view on Apple Vision Pro versus HoloLens.

The horizontal field of view isn’t edge-to-edge. There is an area of black nothingness at the edges of peripheral vision. This is about the same as the other headsets I’ve used — but also not quite what Apple is trying to demonstrate in materials about the headset.

In the enterprise cases that Apple has put forth, the taller vertical field of view allows for more natural eye movement. You can look up just a little, versus moving your entire head and keeping your eyes fixed forward, which is more or less what HoloLens required.

Video passthrough on Apple Vision Pro is crisp and clear

The part I’ve been most skeptical about is how well the Apple Vision Pro passes through the surroundings to the user. The short version is that it does it very well, with crisp and clear images most of the time.

It falls down a little, and some clarity is lost when objects pass through a shadow, or the lighting situation suddenly changes. However, it takes some conscious thought to “see” that there’s missing information if the subject is an object or person you know well as your brain fills in some of the gaps.

In that latter scenario where the lighting changes, it adapts quickly to the change.

Interestingly, when in use in room lighting, the internal screens are about the same brightness as the environment. When you take the headset off, there’s almost no pupil adjustment time required.

However, when a room is brightly lit, the screens are dimmer, and there’s a brief period of pupillary adjustment when you go outside into direct sun.

It’s not clear if this will change as the software matures. We’ll see in the fullness of time.

The inverse of passthrough, EyeSight, where your eyes are shown on the outside of the device, was not available for me to test.

Eye tracking and gesture controls

The main interface of Apple’s Vision Pro headset is based on navigating using eye tracking and gestures, with the system needing to determine what the user is looking at specifically as quickly as possible. It does this with infrared projection, with technology similar to the dot projector in Face ID.

And, the company appears to have stuck the landing on this. Eye tracking accuracy is very good, without some of the fidgeting that we’ve seen in the past in other systems that do the same.

In the future, we feel like this opens the door for more accessibility across a wide range of impairments and disabilities — but more on that as time goes on. There’s a reason why I know what vision cuts from a stroke and hemianopsia are, after all.

Apple has been stealthily training users on Apple Vision Pro gesture controls for a long time. The gestures aren’t that different from those on the iPhone, iPad, and Magic Trackpad on the Mac.

So, in short, if you can use gestures on your iPhone, migrating to the Apple Vision Pro will be easy. That is, easy as long as you can adjust to doing the gestures in the air.

Actually using the Apple Vision Pro

Thanks to my host, I got to use one of the apps that they’re developing. This particular app trains users on an industrial process that demands absolute procedural compliance.

Failure in any step can result in damage to a multi-million dollar piece of equipment that could result in failure of other pieces of equipment, or injury to the operator or technicians nearby.

The system in question is already simulated in its entirety on the Apple Vision Pro. The pass-through cameras are detailed enough that in a room-light situation, the procedural steps can still be read by looking down at a book.

In this simple demonstration, the Apple Vision Pro directs you to the next step, be it a control panel operation or valve manipulation, and shows you where the next step must take place. At present, it doesn’t watch for the action, or require a simulated action by the user, but I’ve been told that they believe that they can use the Apple Vision Pro cameras to watch for proper operation.

At present, there aren’t any QR codes in the manual, nor is there any way to take longer between steps than the tutorial allows. The developers of the app I spoke to say that they will see if that can be implemented, or if live text-reading from the book using the Apple Vision Pro cameras can be done in the future.

So, in summary, the headset allows the wearer to train without fear on a process that, if not followed properly, can result in damage to the machinery or injury to the user. And it does it all without harm to either, even if things go terribly wrong.

Safari and web-browsing is… fine

Early testers already got to use Safari in a limited fashion. I can confirm that it works, as long as nearly every single web standard is followed.

Some narrow type styles don’t read well on the headset. Some design elements just don’t work on the headset very well. Infinitely scrolling websites including Apple’s product pages also don’t read well, and some avant garde designs with custom user interface elements just aren’t navigable.

I don’t think that this is going to cause a revolution or devolution in web design, if for no other reason than Apple Vision Pro won’t be a major player in browser share. It is something that web developers might want to keep in the back of their heads, though, and assess as the platform evolves.

iPad apps are a good gateway, but custom ports will be needed

As part of my testing, I used a few of my favorite iPad apps. The virtual keyboard works fine, but takes some getting used to. I’ve struggled here to describe how it takes some getting used to, but words fail — suffice it to say that you won’t be typing as fast on Apple Vision Pro as you do on your iPad, MacBook Pro, or external keyboard.

Every productivity app we tested works fine. In most cases, though, you should consider if the Apple Vision Pro is the best place to use the app. For instance, PDF readers work fine, but the iPad is a better platform for most to read on, versus on a floating window on your Apple Vision Pro.

Games are still rough, and Game Center is effectively illegible — but I expect this will clear up with time. Touch actions aren’t always being captured, and this is fine for strategy games for the most part, but terrible for timing-sensitive ones.

Unfortunately, the environment I was testing in didn’t allow for use of the Mac Virtual Display — but I’ve been told it works well.

In the early days of the iPad, most of the apps available were iPhone apps, upscaled for the iPad. This doesn’t feel much different. Apps that use the key features of the new platform will fare better, and be better user experiences.

Ultimately, hammering those apps into shape is the main reason why we think that the Apple Vision Pro has been announced when it has, in the given form-factor and price. More on that thought in a bit, though.

Apple Vision Pro audio is designed to keep you in your environment

The Apple Vision Pro speakers are small, and it’s clear that Apple has moved what it has learned from AirPods and even MacBook speakers to the headset. Audio is clear and crisp, and sufficiently loud for media consumption.

What it is not, is all-encompassing. Apple has made a conscious decision to keep the audio off-ear, like in the Valve Index, to keep the wearer grounded in the space.

This makes the audio experience more like when you’re listening to media on your computer, versus on AirPods Max or the like.

This is fine, and by design. Apple has made it clear that it doesn’t want to isolate Apple Vision Pro users from other people or surroundings, and this is about the best way you can do that with a headset.

It’s not clear if there’s a way to use AirPods in conjunction with the Apple Vision Pro, and I’m not sure if Apple wants you to anyway.

Apple Vision Pro hands on — Battery life

Whatever the Apple Vision Pro battery ends up being called, it’s relatively unobtrusive in a pocket or on a desk. Battery life is still a little rough, probably because of device analytics at the moment, with us getting a bit over an hour of use without external charge. We’re expecting this to radically improve as the software evolves.

The USB-C port works as a pass-through like the MagSafe Battery Pack does with Lightning and the iPhone — it will charge the battery and power the headset simultaneously, with a 60W USB-C PD charger. A 30W USB-C charger will power the device fully or charge the battery, and less than that will supply some power, but not enough to keep the battery topped off.

And again, this is something that developers will ultimately decide, but most use cases we’ve seen so far are mostly stationary. I don’t think it’s going to be a major crisis to keep the headset attached to a USB-C power source near constantly.

The Apple Vision Pro is not weightless

The headset in use weighs a hair over a pound on the head. It’s balanced well with just the back-strap, especially after you crank on the knob to get the tightness right. Over the course of the hour and a half or so I got to use the headset after setup, some light neck fatigue set in. Other headsets like the Valve Index are worse, though.

Given my experience with other headsets, at this point, I was expecting some mild eyestrain to set in. Semi-surprisingly, I didn’t get any, which may be attributable to the display tech that Apple is using, or some other undocumented Apple tech shenanigans.

We’ve seen images of a top-strap, but I didn’t have one available to use. I think that this is more for bigger hair or differently-shaped heads than mine, more than for limiting fatigue.

And, it’s a little warm in practical use. Inside the headset, it’s a bit warmer than the exterior air, and that becomes obvious after about an hour — but not uncomfortable.

Cool air is drawn in to cool the electronics through vents on the bottom of the headset and exhausted through the top vents. This appears to be done with a very small fan, with Apple engineering keeping the fan quieter than ambient noise.

Apple Vision Pro is a glimpse of a future, but not everybody’s

As was the case with the iPhone, there are other products (mostly) in the same category as the Apple Vision Pro. Apple has never needed to be first to something. It has always taken the time to iterate on a concept internally before releasing a genre-defining product.

The Apple Vision Pro defines the genre for sure, at the very high end. This time, Apple intentionally aimed too high on cost for the consumer.

Instead, the company has decided to make aspirational technology. The Apple Vision Pro, as it stands, I feel is what Apple wants the market to be shooting for, going forward.

It will immediately be adopted in enterprise. It will take more time and convincing for the general public.

There’s still a lot of work to do on the operating system and presentation, and understandably so. The headset is a phenomenal piece of engineering, and to completely exercise that technology, the operating system and development tools are going to need a lot of iteration not just before launch, but for years afterwards.

Just like the iPhone did, and does.

Apple won’t be the one to say who and what the Apple Vision Pro is for, nor will it do so for the Apple Vision non-Pro headset that we expect to see in about two years.

Developers are the ones who are ultimately going to tell the true tale of what to use the Apple Vision Pro for. And, it’s good that they have the hardware now, and not five days before launch.

And as with the iPhone and iPad, Apple Vision Pro won’t be the best tool for everything and everybody. I still feel reading and surfing are better on iPhone, iPad, and Mac, for instance, and other apps will require a more conventional interface.

But, as I had the incredible privilege to test, things like industrial training could be spectacular when fully implemented on Apple Vision Pro. It’s easy to see medical implementations coming to the Apple Vision Pro to learn and perform treatments, perhaps guided by a professional, and other tasks being ported to the Apple Vision Pro.

And, I’m very excited to see how 3D video recording and playback work, when they’re eventually available. Full FaceTime Persona generation looks like it will be impressive, but we’ll see with time.

Apple says the Apple Vision Pro will become available in early 2024. Let’s be patient and see what pops out, even if they don’t make that date, because what’s already been delivered to a select few is impressive enough.