Developers evangelize Apple Vision Pro labs in latest update

Apple has shared a handful of developer testimonials after their day-long Apple Vision Pro labs, suggesting they are a “proving ground” for the future of spatial apps.

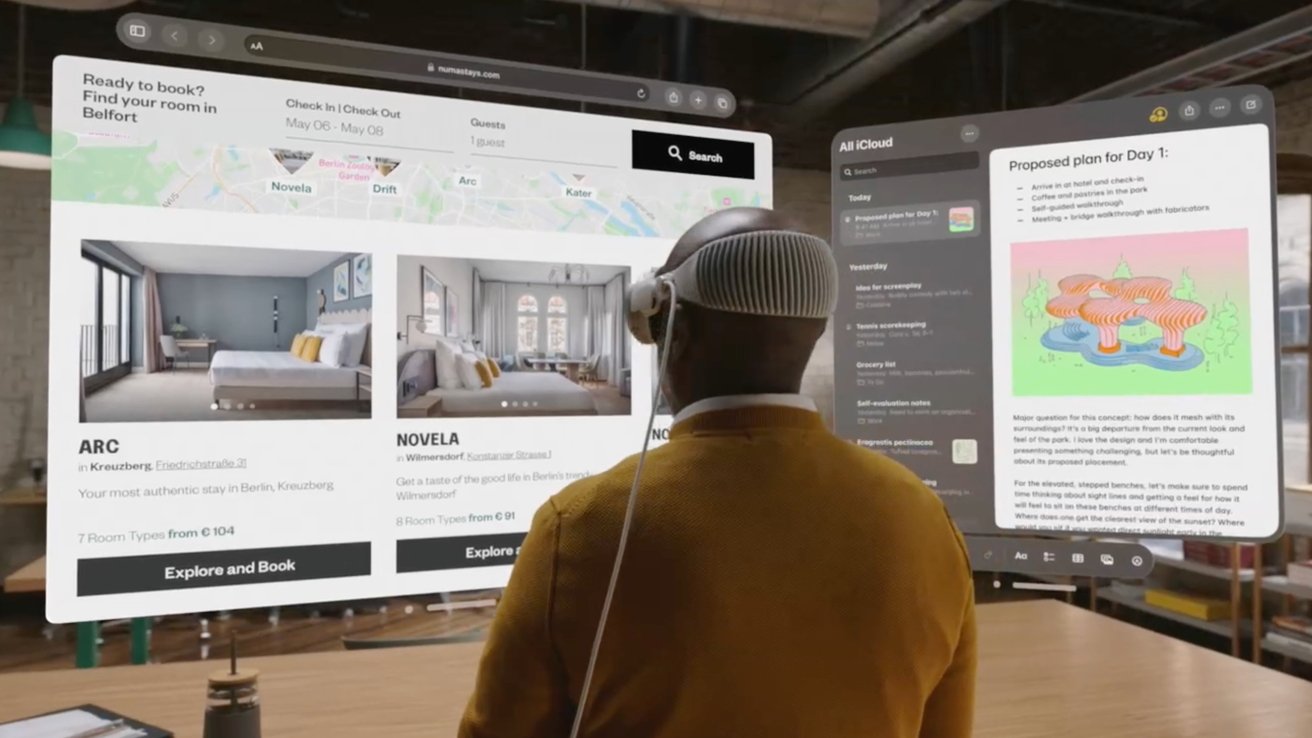

Spatial computing requires developers to rethink their apps for 3D space, which is difficult when Apple Vision Pro doesn't launch until 2024. So Apple is holding labs in six cities around the world where developers can test the device.

Reports suggested these labs haven't been well attended, which has led Apple to share a brief set of testimonials from prominent developers, including the creators of Fantastical, Widgetsmith, and Spool.

Flexibits is the company behind Fantastical, and CEO Michael Simmons shared that using Apple Vision Pro at a lab "was like seeing Fantastical for the first time." He described the experience as "a proving ground" and said he could see many ways to take Flexibits' apps beyond the borders of today's displays.

“A bordered screen can be limiting. Sure, you can scroll, or have multiple monitors, but generally speaking, you’re limited to the edges,” Simmons says. “Experiencing spatial computing not only validated the designs we’d been thinking about — it helped us start thinking not just about left to right or up and down, but beyond borders at all.”

Widgetsmith creator David Smith, also known as Underscore in some circles, walked away from the Apple Vision Pro lab with a handwritten page full of new ideas. Getting "the full experience" on real Apple Vision Pro hardware, rather than running apps in the simulator, gave Smith plenty to think about.

“I’d been staring at this thing in the simulator for weeks and getting a general sense of how it works, but that was in a box,” Smith says. “The first time you see your own app running for real, that’s when you get the audible gasp.”

Guerrette, the chief experience officer at Pixite, tested the company's video creation and editing app Spool at the labs. The app normally relies on taps on a touchscreen, which Apple Vision Pro doesn't have, so the lab gave him a chance to try out different ways of interacting with the app on the headset.

“At first, we didn’t know if it would work in our app,” Guerrette says. “But now we understand where to go. That kind of learning experience is incredibly valuable: It gives us the chance to say, ‘OK, now we understand what we’re working with, what the interaction is, and how we can make a stronger connection.’”

Chris Delbuck of Slack also attended a lab to test Slack's iPad app on Apple Vision Pro. He came away thinking that Slack needs a full 3D app rather than a simple iPad port.

As David Smith pointed out, the labs aren't a cure-all for developers. Not every problem was solved, but he learned enough that he can now focus on the solutions he'll need.

Speaking of testimonials, we at AppleInsider were able to get our hands on an Apple Vision Pro in the wild. We've shared how it stacks up against other headsets and what sets Apple's entry apart.

Apple continues to offer labs in Cupertino, London, Munich, Shanghai, Singapore, and Tokyo, where developers can attend in person. Developers can also get a developer kit shipped directly to them, but kits are in limited supply and come with strict security requirements.