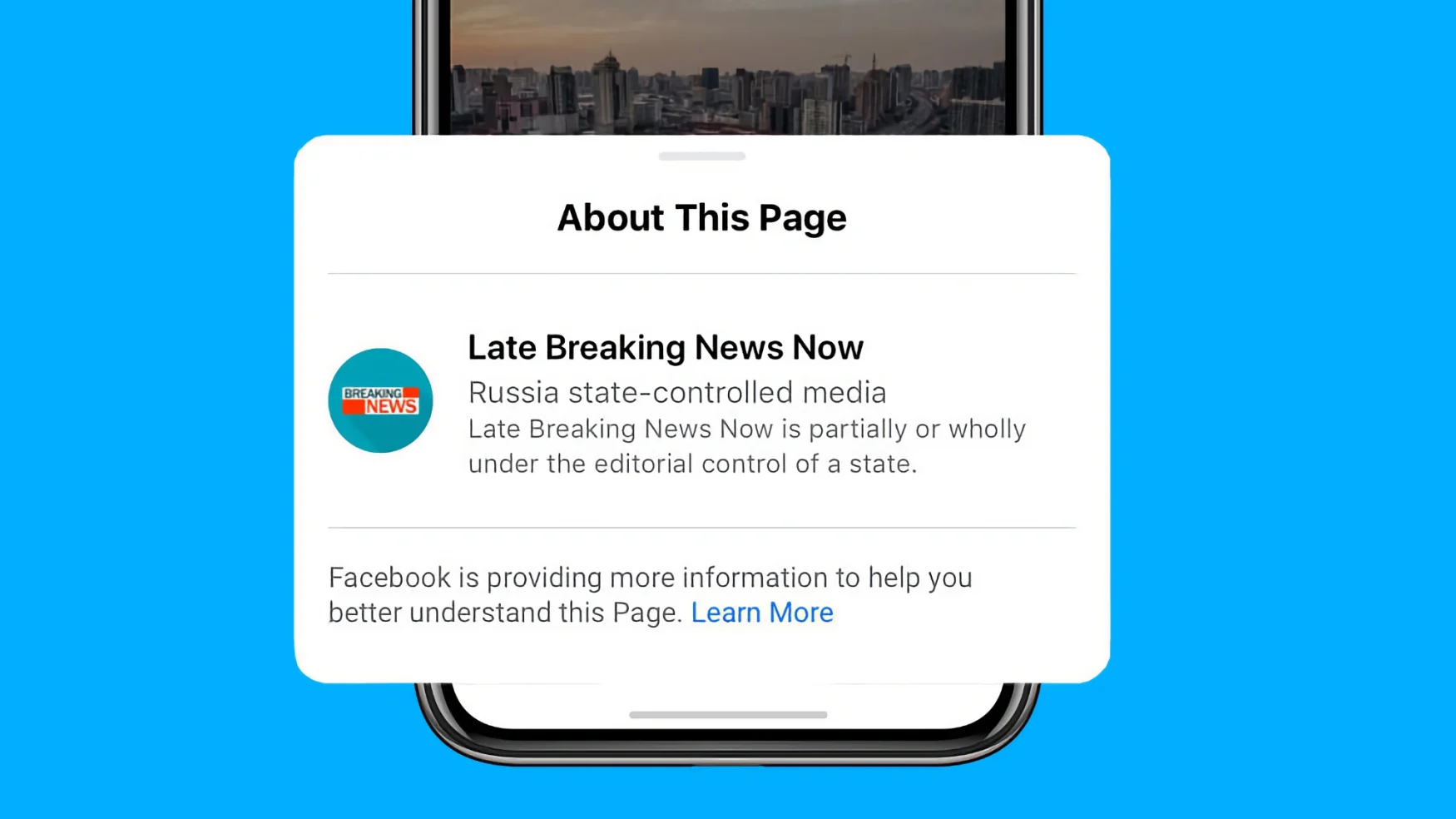

Facebook’s ‘state-controlled media’ labels appear to reduce engagement

Facebook’s “state-controlled media” labels appear to reduce engagement with content from authoritarian nations. A new study finds that users engaged less with posts when they noticed labels identifying the content as coming from Chinese or Russian government-run media. However, the labels also appeared to increase users’ favorability toward posts from Canadian state media, suggesting that broader perceptions of the labeled country shape how effective the tags are.

Researchers from Carnegie Mellon University, Indiana University and the University of Texas at Austin conducted a set of studies that “explored the causal impact of these labels on users’ intentions to engage with Facebook content.” When users noticed the label, they tended to engage less with the content if it came from a country they perceived negatively.

The first experiment studied 1,200 people with US-based Facebook accounts, showing them posts with and without state-controlled media labels. Their engagement with posts from Russian and Chinese state media went down, but only if they “actively noticed the label.” A second test in the series, involving 2,000 US Facebook users, found that their behavior was “tied to public sentiment toward the country listed on the label.” In other words, they responded positively to media labeled as Canadian state-controlled and negatively to media labeled as Chinese or Russian government-run.

Finally, a third experiment examined how Facebook users interacted with state-controlled media before and after the platform added the labels. The researchers concluded that the change had a “significant effect”: sharing of labeled posts dropped by 34 percent after the labels were introduced, and likes of labeled posts fell by 46 percent. The authors also noted that training users on the labels (“notifying them of their presence and testing them on their meaning”) significantly increased the odds that users noticed them.

“Our three studies suggest that state-controlled media labels reduced the spread of misinformation and propaganda on Facebook, depending on which countries were labelled,” Patricia L. Moravec, the study’s lead author, wrote in the paper’s summary.

However, the studies had some limitations in distinguishing correlation from causation. The authors say they couldn’t fully verify whether their results were caused by the labels or by Facebook’s opaque newsfeed algorithms, which downrank labeled posts (and, more broadly, make third-party research on the platform exceedingly difficult). The authors also note that the experiments measured online users’ “beliefs, intentions to share, and intentions to like pages” but not their actual behavior.

The researchers (unsurprisingly, given the results) recommend that social media companies “clearly alert and inform users of labeling policy changes, explain what they mean, and display the labels in ways that users notice.”

As the world grapples with online misinformation and propaganda, the study’s authors urge Facebook and other social platforms to do more. “Although efforts are being made to reduce the spread of misinformation on social media platforms, efforts to reduce the influence of propaganda may be less successful,” suggests co-author Nicholas Wolczynski. “Given that Facebook debuted the new labels quietly without informing users, many likely did not notice the labels, reducing their efficacy dramatically.”