Intel Launches Sapphire Rapids Fourth-Gen Xeon CPUs and Ponte Vecchio Max GPU Series

After years of delays, Intel formally launched its fourth-gen Xeon Scalable Sapphire Rapids CPUs, in both regular and HBM-infused Max flavors, and its “Ponte Vecchio” Data Center GPU Max Series today. Intel’s expansive portfolio of 52 new CPUs will face off with AMD’s EPYC Genoa lineup that debuted last year. The company also slipped in a low-key announcement of its last line of Optane Persistent Memory DIMMs.

While AMD’s chips maintain the core count lead with a maximum of 96 cores on a single chip, Intel’s Sapphire Rapids chips bring the company up to a maximum of 60 cores, a 50% improvement over its previous peak of 40 cores with the third-gen Ice Lake Xeons. Intel claims this will lead to a 53% improvement in general compute over its prior-gen chips, but largely avoided making direct comparisons to AMD’s chips during its presentations. However, Intel has provided samples to the press for unrestricted third-party reviews, so it isn’t shying away from the competition.

Sapphire Rapids leans heavily into new acceleration technologies that can either be purchased outright or bought through a new pay-as-you-go model. These new purpose-built accelerator regions of the chip are designed to radically boost performance in several types of work, like compression, encryption, data movement, and data analytics, that typically require discrete accelerators for maximum performance.

Despite having a clear core count lead, AMD doesn’t have similar acceleration features for its Genoa processors. Intel claims an average 2.9X improvement in performance-per-watt over its own previous-gen models in some workloads when employing the new accelerators. Intel also claims a 10X improvement in AI inference and training and a 3X improvement in data analytics workloads.

Intel’s Sapphire Rapids, which comes fabbed on the ‘Intel 7’ process, also brings a host of new connectivity technologies, like support for PCIe 5.0, DDR5 memory, and the CXL 1.1 interface (type 1 and 2 devices), giving the company a firmer footing against AMD’s Genoa. We’re hard at work benchmarking the chips for our full review that we will post in the coming days, but in the interim, here’s a brief overview of the new lineup.

Intel 4th-Gen Xeon Scalable Sapphire Rapids Pricing and Specifications

Intel’s Sapphire Rapids product stack spans 52 models carved into ‘performance’ and ‘mainstream’ dual-socket general-purpose models, along with specialized models for liquid-cooled, single-socket, networking, cloud, HPC, and storage and HCI systems. As a result, it feels like there’s a specialized chip for nearly every workload, creating a confusing product stack.

Those chips are then carved into various Max, Platinum, Gold, Silver, and Bronze sub-tiers, each denoting various levels of socket scalability, support for Optane persistent memory, RAS features, SGX enclave capacity, and the like.

The Sapphire Rapids chips also now come with a number of onboard accelerator devices that varies by SKU. We’ll dive into the different types of accelerators below. For now, it’s important to know that each chip can have a different number of accelerator ‘devices’ enabled (listed in the spec sheet above), with multiple devices of each accelerator type available per chip (think of the number of ‘devices’ as akin to accelerator ‘cores’).

Users can buy chips that are fully loaded with all four devices for all four types of accelerators enabled, or they can opt for less expensive chip models with a lower number of enabled devices and then activate them later via a new pay-as-you-go mechanism called Intel On Demand. The ‘+’ models have at least one accelerator of each type enabled by default, but there are two classes of chips with two different allocations of accelerators. We cover those details in the next section.

The new processors all support AVX-512, Deep Learning Boost (DL Boost), and the new Advanced Matrix Extensions (AMX) instructions, with the latter delivering explosive performance uplift in AI workloads by using a new set of two-dimensional registers called ‘tiles.’ Intel’s AMX implementation will primarily be used to boost performance in AI training and inference operations.
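
For readers who want to confirm these instruction sets on a system they have access to, here is a minimal sketch, assuming a Linux host that exposes CPU feature flags in /proc/cpuinfo. The flag names (avx512f, avx512_vnni, amx_tile, amx_bf16, amx_int8) are the ones the Linux kernel reports; the function names and the mapping of labels to flags are our own illustrative choices, not anything Intel ships.

```python
# Assumption: Linux on x86, where /proc/cpuinfo lists feature flags on a "flags" line.
# The labels on the left are ours; the flag strings on the right are kernel flag names.
FEATURE_FLAGS = {
    "AVX-512 (foundation)": "avx512f",
    "DL Boost (AVX-512 VNNI)": "avx512_vnni",
    "AMX tiles": "amx_tile",
    "AMX BF16": "amx_bf16",
    "AMX INT8": "amx_int8",
}


def parse_cpu_flags(cpuinfo_text):
    """Return the set of flags from the first 'flags' line in cpuinfo-style text."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            return set(value.split())
    return set()


def detect_features(cpuinfo_text):
    """Map each feature label to True/False depending on whether its flag is present."""
    flags = parse_cpu_flags(cpuinfo_text)
    return {name: flag in flags for name, flag in FEATURE_FLAGS.items()}


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:
            text = f.read()
    except OSError:
        text = ""  # not Linux, or the file is unreadable: every feature reports "no"
    for name, present in detect_features(text).items():
        print(f"{name}: {'yes' if present else 'no'}")
```

A flag being present only says the CPU and kernel expose the feature; whether an application actually benefits depends on the software being built to use it.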

As before, Intel’s 4th-Gen Xeon Scalable platform supports 1, 2, 4, and 8-socket configurations, whereas AMD’s Genoa only scales to two sockets. AMD leads in PCIe connectivity options, with up to 128 PCIe 5.0 lanes on offer, while Sapphire Rapids peaks at 80 PCIe 5.0 lanes.

Sapphire Rapids also supports up to 1.5TB of DDR5-4800 memory spread across eight channels per socket, while AMD’s Genoa supports up to 6TB of DDR5-4800 memory spread across 12 channels. Intel has spec’d its 2DPC (DIMMs per Channel) configuration at DDR5-4400, whereas AMD has not finished qualifying its 2DPC transfer rates (the company expects to release the 2DPC spec this quarter).
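
To put those channel counts in perspective, here is a rough back-of-the-envelope sketch of theoretical peak memory bandwidth per socket. It assumes each DDR5 channel moves 64 bits (8 bytes) of data per transfer and ignores ECC bits and real-world efficiency, so treat the results as upper bounds rather than measured figures. The function name is ours.

```python
BYTES_PER_TRANSFER = 8  # assumption: 64 data bits per DDR5 channel, ECC excluded


def peak_bandwidth_gbs(channels, mega_transfers_per_s):
    """Theoretical peak bandwidth in GB/s for a given channel count and MT/s rate."""
    return channels * mega_transfers_per_s * BYTES_PER_TRANSFER / 1000


configs = {
    "Sapphire Rapids, 8ch DDR5-4800 (1DPC)": (8, 4800),
    "Sapphire Rapids, 8ch DDR5-4400 (2DPC)": (8, 4400),
    "Genoa, 12ch DDR5-4800": (12, 4800),
}

for label, (channels, rate) in configs.items():
    print(f"{label}: {peak_bandwidth_gbs(channels, rate):.1f} GB/s")
```

Under these assumptions the numbers work out to 307.2 GB/s and 281.6 GB/s for Sapphire Rapids at DDR5-4800 and DDR5-4400, respectively, and 460.8 GB/s for Genoa, which is simply the 12-versus-8 channel advantage expressed in bandwidth.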

The Sapphire Rapids processors span from eight-core models to 60 cores, with pricing beginning at $415 and peaking at $17,000 for the 8490H. The flagship Xeon Scalable Platinum 8490H has 60 cores and 120 threads, with all four accelerator types fully enabled. The chip also has 112.5 MB of L3 cache and a 350W TDP rating.

Sapphire Rapids’ TDP envelopes span from 120W to 350W. The 350W rating is significantly higher than the 280W peak with Intel’s previous-gen Ice Lake Xeon series, but the inexorable push for more performance has the industry at large pushing to higher limits. For instance, AMD’s Genoa tops out at a similar 360W TDP, albeit for a 96-core model, and can even be configured as a 400W chip.

The 8490H is the lone 60-core model, and it is only available with all acceleration engines enabled. Stepping back to the 56-core Platinum 8480+ will cost you $10,710, but that comes with only one of each type of acceleration device active. This processor has a 3.8 GHz boost clock, 350W TDP, and 105MB of L3 cache.
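
As a quick sanity check on those two models, the sketch below works out list price and L3 cache per core using only the figures quoted above. The dictionary layout and function name are our own, and the prices are list prices, not what large customers will actually pay.

```python
# Figures taken from the specs quoted in this article; structure is illustrative.
chips = {
    "Platinum 8490H": {"cores": 60, "price_usd": 17000, "l3_mb": 112.5},
    "Platinum 8480+": {"cores": 56, "price_usd": 10710, "l3_mb": 105.0},
}


def per_core(chip):
    """Return list price and L3 cache divided evenly across the chip's cores."""
    return {
        "usd_per_core": chip["price_usd"] / chip["cores"],
        "l3_mb_per_core": chip["l3_mb"] / chip["cores"],
    }


for name, spec in chips.items():
    m = per_core(spec)
    print(f"{name}: ${m['usd_per_core']:.2f}/core, {m['l3_mb_per_core']:.3f} MB L3/core")
```

Both chips land at 1.875 MB of L3 per core, while the price per core rises from about $191 on the 8480+ to about $283 on the 8490H, so the premium buys the extra four cores plus the fully enabled accelerators rather than more cache per core.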

Intel Xeon Sapphire Rapids Accelerators

Intel’s new on-die accelerators are a key new component for its Sapphire Rapids processors. As mentioned above, customers can either purchase chips with all of the accelerator options activated or opt for less expensive models that will allow them to purchase accelerator licenses as needed through the Intel On Demand service. Not all chips have the same accelerator options, which we’ll cover below.

Intel hasn’t provided a pricing guide for the accelerators yet, but the licenses will be provided through server OEMs and are activated via software and a licensing API. Instead of buying a full license outright, you can also opt for a pay-as-you-go feature with usage metering to measure how much of a service you use. This feature will likely be popular among CSPs.

The idea behind this service is to allow customers to activate and pay for only the features they need, and also to provide a future upgrade path that doesn’t require buying new servers or processors. Instead, customers could opt to employ acceleration engines to boost performance down the line. This also allows Intel and its partners to carve multiple types of SKUs from the same functional silicon, thus simplifying supply chains and reducing their costs.

These functions represent Intel’s continuation of its long history of bringing fixed-function accelerators onto the processor die. Still, the powerful units on Sapphire Rapids will require software support to extract their full performance capabilities. Intel is already working with several software providers to enable support in a broad range of applications, many of which you can see in the album above.

Intel has four types of accelerators available with Sapphire Rapids. The Data Streaming Accelerator (DSA) improves data movement by offloading data-copy and data-transformation operations from the CPU cores. The Dynamic Load Balancer (DLB) accelerator steps in to provide packet prioritization and dynamically balance network traffic across the CPU cores as the system load fluctuates.

Intel also has an In-Memory Analytics Accelerator (IAA) that accelerates analytics performance and offloads the CPU cores, thus improving database query throughput and other functions.

Intel has also brought its Quick Assist Technology (QAT) accelerators onboard the CPU — this function used to reside on the chipset. This hardware offload accelerator augments cryptography and compression/decompression performance. Intel has employed QAT accelerators for quite some time, so this technology already enjoys broad software support.

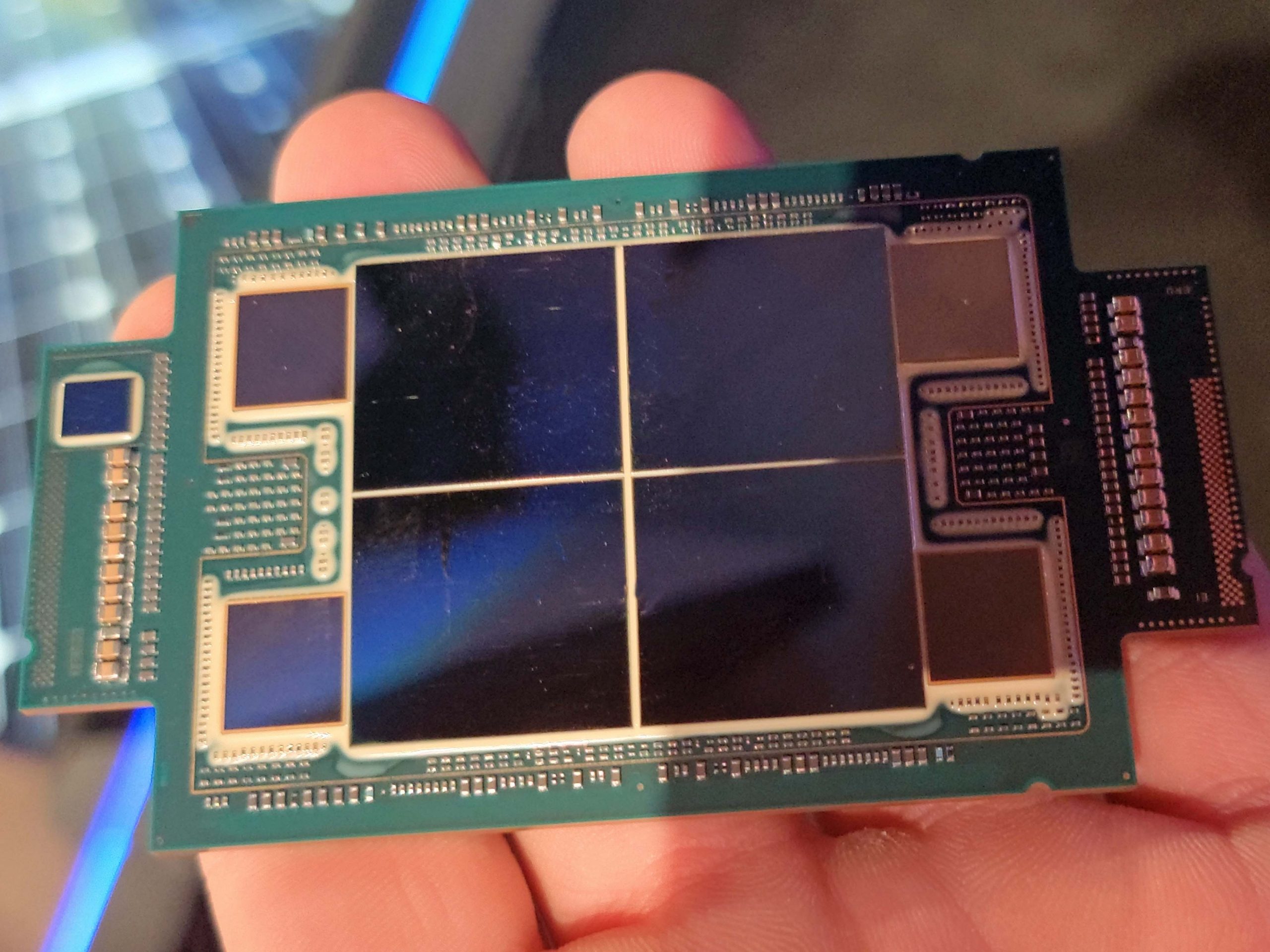

Unfortunately, the chips have varying acceleration capabilities, so you can’t buy four ‘devices’ on all models. The Sapphire Rapids processors come in two types of designs (Die Chops), as listed in the SKU table. The XCC chips consist of four dies, and each die has one of each accelerator (IAA, QAT, DSA, DLB). That means you can activate a maximum of four accelerators of each type on these chips (for example, 4 IAA, 4 QAT, 4 DSA, 4 DLB).

In contrast, some of the chips use a single MCC die, which only comes with one IAA and DSA accelerator and two QAT and DLB accelerators. That means you can only activate 2 QAT and DLB accelerators and one IAA and DSA accelerator (2 QAT, 2 DLB, 1 IAA, 1 DSA).
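
The per-design limits described above can be captured in a small lookup table. The following is a hypothetical sketch for checking whether a desired accelerator configuration fits a given die type; the names MAX_DEVICES, can_activate, and headroom are ours and do not correspond to any Intel On Demand API, which Intel has not detailed here.

```python
# Maximum accelerator devices per die type, as described in this article.
MAX_DEVICES = {
    "XCC": {"IAA": 4, "QAT": 4, "DSA": 4, "DLB": 4},
    "MCC": {"IAA": 1, "QAT": 2, "DSA": 1, "DLB": 2},
}


def can_activate(die, requested):
    """True if every requested device count fits within the die type's limits."""
    limits = MAX_DEVICES[die]
    return all(0 <= count <= limits.get(acc, 0) for acc, count in requested.items())


def headroom(die, enabled):
    """How many more devices of each type could still be activated on this die type."""
    limits = MAX_DEVICES[die]
    return {acc: limit - enabled.get(acc, 0) for acc, limit in limits.items()}


if __name__ == "__main__":
    wanted = {"QAT": 4, "DSA": 2}
    print("XCC:", can_activate("XCC", wanted))  # fits: XCC allows four of each
    print("MCC:", can_activate("MCC", wanted))  # does not fit: MCC caps QAT at 2, DSA at 1
    # A hypothetical XCC chip shipping with one device of each type enabled:
    print(headroom("XCC", {"IAA": 1, "QAT": 1, "DSA": 1, "DLB": 1}))
```

The practical takeaway is that the die type sets a hard ceiling: no license purchase can push an MCC-based chip past two QAT and DLB devices or one IAA and DSA device.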

Intel Max CPU Series and Ponte Vecchio Max GPU Series

Intel recently announced several details about its forthcoming Xeon Max Series of CPUs and the Intel Data Center GPU Max Series (Ponte Vecchio). Today marks the formal launch.

Intel’s HBM2e-equipped Max CPU models come to market with 32 to 56 cores and are based on the standard Sapphire Rapids design. These chips are the first x86 processors to employ HBM2e memory on-package, thus providing a larger 64GB pool of local memory for the processor. The HBM memory will help with memory-bound workloads that aren’t as sensitive to core counts, so the Max models come with fewer cores than standard models. Target workloads include computational fluid dynamics, climate and weather forecasting, AI training and inference, big data analytics, in-memory databases, and storage applications.

The Max CPUs can operate in three configurations: with the HBM memory used for all memory operations (HBM only – no DDR5 memory required), in an HBM ‘Flat Mode’ that presents the HBM as a separate memory region (this requires extensive software support), or in an HBM ‘Caching Mode’ that uses the HBM2e as a DRAM-backed cache. The latter requires no code changes and will likely be the most frequently used mode of operation.

The Xeon Max CPUs will square off with AMD’s EPYC Milan-X processors, which come with a 3D-stacked L3 cache called 3D V-Cache. The Milan-X models have up to 768MB of total L3 cache per chip that delivers an incredible amount of bandwidth, but it doesn’t provide as much capacity as Intel’s approach with HBM2e. Both approaches have their respective strengths and weaknesses, so we’re eager to put the Xeon Max processors to the test.

Notably, Fujitsu’s A64FX Arm processor uses a similar HBM technique. The HBM-equipped A64FX processors power the Fugaku supercomputer, which was the fastest in the world for two years (until the AMD-powered exascale-class Frontier took over last year). Fugaku still maintains the second spot on the Top500.

Intel also launched its Max GPU Series, previously code-named Ponte Vecchio. Intel had previously unveiled the three different GPU models, which come in both standard PCIe and OAM form factors. You can read more about the Max GPU Series here.

Intel Optane Persistent Memory (PMem) 300

As part of its Sapphire Rapids launch, Intel quietly introduced what is the final series of Optane Persistent Memory DIMMs. The final generation, codenamed Crow Pass but officially known as the Intel Optane Persistent Memory 300, will come in 128, 256, and 512 GB capacities and operate at DDR5-4400. That’s a big step over the previous peak of DDR4-3200, but it also means that Sapphire Rapids systems will have to downclock the standard memory from the supported DDR5-4800 to DDR5-4400 if they plan on employing Optane.

Intel cites 56% more sequential bandwidth and 214% more bandwidth in random workloads, along with support for up to 4TB of Optane per socket, or 6TB total for a system. Just like the previous-gen Optane 200 series, the DIMMs operate at 15W. However, they now step up to a DDR-T2 interface and AES-XTS 256-bit encryption.

At its debut in 2015, Intel and partner Micron touted the underlying tech, 3D XPoint, as delivering 1000x the performance and 1000x the endurance of NAND storage, and 10x the density of DRAM, but the technology is winding its way to an end. Intel has already stopped producing its Optane storage products for client PCs, which makes sense as it is selling its NAND business to SK Hynix.

However, Intel has retained its memory business for the data center, including its persistent memory DIMMs that can function as an adjunct to main memory — a capability only Intel offers. Now those products will also not see any future generations after the next-gen Crow Pass modules that arrive with Sapphire Rapids processors.

Intel cites an industry shift to CXL-based architectures as a reason for winding down the Optane business, mirroring Intel’s ex-partner Micron’s sentiments when it exited the business last year. Sapphire Rapids supports both Optane DIMMs and the CXL interface, but this will be one of the last times the two are seen together — CXL will be the industry’s preferred method of connecting exotic memories to chips in the future.

Our testing for the Sapphire Rapids review is underway, so stay tuned for the full performance breakdown and architectural details in the coming days.